For most of the past century, electricity was the currency of technological growth. The power grid determined industrial competitiveness, geopolitical strategy, and even social stability. But in the 21st century, the new electricity is compute.

Artificial Intelligence (AI), cloud computing, hyperscale data centers, and high-performance GPUs have transformed compute from a background utility into a frontline asset class. The market now treats computing power not just as a service, but as a tradable, scarce, and strategically vital resource, no different from oil in the 20th century or gold in the 19th.

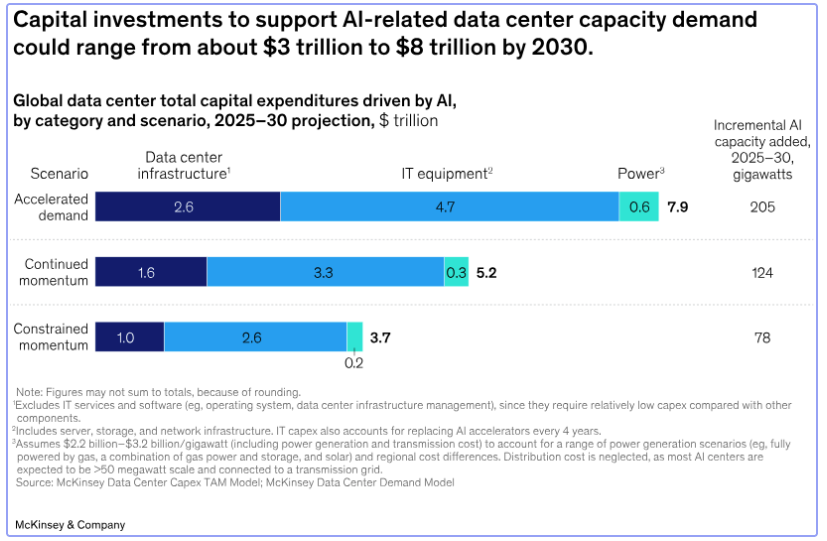

The financialization of compute is unfolding at unprecedented speed. Venture capital, sovereign wealth funds, and enterprises are pouring billions into GPU clusters, data center capacity, and decentralized compute networks. Governments are subsidizing infrastructure, restricting exports, and racing to secure local compute sovereignty.

The result? Compute is no longer just an IT line item. It is becoming a global capital magnet, an asset class shaping markets, investment strategies, and the architecture of the future digital economy.

Compute power has become the new necessity; everyone wants it, and everyone is willing to pay big money for it. Companies are spending hundreds of billions of dollars to build data centers and buy powerful compute chips. This isn’t just about technology anymore. It’s about who controls the future.

Why Everyone Wants Compute Power

Think of compute power like electricity was 100 years ago. Back then, the companies that controlled electricity controlled everything else. Compute power works the same way today: companies use it to run AI and to power the apps and websites we use every day.

The explosion of AI adoption in 2023–2025 created an unprecedented surge in demand for GPUs. Training and inference workloads for large language models, generative AI, and multi-modal agents demand tens of thousands of high-performance chips running around the clock.

Bloomberg Intelligence underscores the scale of scarcity: there’s now a 12–24 month lag between power demand and delivery. This mismatch delays new compute facilities and pushes up prices for existing capacity.

The result? GPUs are trading like commodities. Enterprises are hoarding H100 clusters, investors are securitizing compute contracts, and DePIN (Decentralized Physical Infrastructure Network) projects are turning idle GPUs into yield-bearing assets.

Just as oil futures and REITs (real estate investment trusts) structured energy and property into capital markets, compute marketplaces are emerging as the financialization layer for compute.

The Numbers Are Mind-Blowing

Let’s look at how big this market really is. The numbers will shock you.

The global data center market was worth $347.6 billion in 2024, and experts predict it will grow to $652 billion by 2030. That’s almost doubling in just six years! Other research firms, working from different baselines, project $535.6 billion by 2029 at a growth rate of 15.6% per year.

But here’s where it gets really interesting. Companies are planning to spend $750 billion on AI-focused compute infrastructure by 2026. That’s more money than the entire GDP of most countries.

The Big Picture:

- Data center market: $347.6 billion in 2024, growing to $652 billion by 2030
- AI infrastructure spending: $750 billion by 2026
- Growth rate: 11–15% every year
- Market doubling time: About 6 years
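The growth-rate and doubling-time bullets can be cross-checked with the standard compound annual growth rate (CAGR) formula. The minimal Python sketch below uses only the market figures quoted above; the helper names `cagr` and `doubling_time` are illustrative, not taken from any particular library or report.

```python
import math


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    return (end_value / start_value) ** (1 / years) - 1


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


# Market figures quoted above: $347.6B (2024) and a projected $652B (2030).
implied_rate = cagr(347.6, 652.0, 2030 - 2024)
print(f"Implied growth 2024-2030: {implied_rate:.1%} per year")

# Doubling times at both ends of the quoted 11-15% range.
for rate in (0.11, 0.15):
    print(f"At {rate:.0%} per year, the market doubles in {doubling_time(rate):.1f} years")
```

Run as written, it reports an implied rate of about 11.1% per year for the $347.6 billion to $652 billion projection, and doubling times of roughly 6.6 years at 11% and 5.0 years at 15%, so “about 6 years” reflects the lower end of the quoted range.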

Tech Giants Are Spending Like Never Before

Microsoft leads the pack with plans to spend $80 billion in 2025 just on AI data centers. That’s $80 billion in one year! Google (Alphabet), Amazon, and Meta are also spending big. Together, these four companies will spend more than $215 billion on compute infrastructure in 2025.

Why are they spending so much? Because they know that whoever controls the most compute power wins the AI race. It’s like an arms race, but instead of weapons, companies are buying servers and compute chips.

Microsoft’s $80 billion plan focuses mainly on the United States. The company wants to build data centers across America to make sure American AI stays ahead of competitors from other countries. This spending shows how important national compute power has become for countries wanting to stay competitive.

Other tech giants follow similar strategies. They buy land, build massive data centers, and fill them with thousands of powerful computers. These facilities run 24 hours a day, seven days a week, processing AI requests and storing data for billions of users worldwide.

Corporate Spending Facts:

- Microsoft: $80 billion in 2025 for AI data centers
- Combined spending by top 4 tech companies: $215+ billion annually
- Focus: Building computing power faster than competitors
- Strategy: Whoever has the most power wins

The World Is Building Compute Fortresses

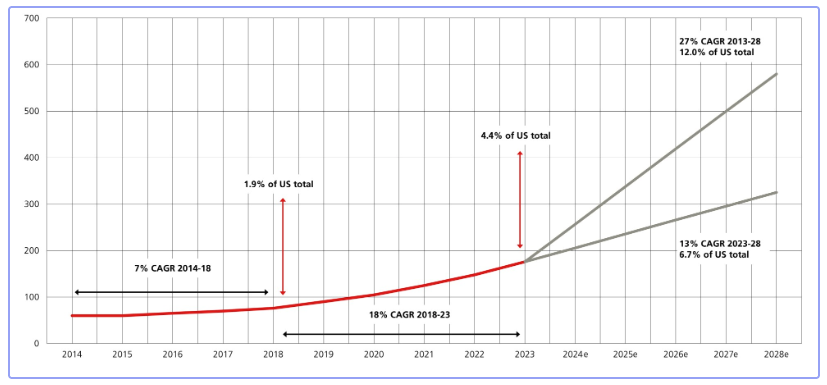

Right now, there are over 11,800 data centers around the world. The United States leads with 5,400 facilities – that’s almost half of all data centers globally. These aren’t small buildings. Many are as big as airplane hangars, filled with thousands of servers humming away day and night.

The biggest facilities are called “hyperscale” data centers. These giants handle 75% of all global computing work. Companies like Google, Amazon, and Microsoft own most of these massive facilities.

But the action is moving to Asia. The Asia-Pacific region will add almost half of all new computing capacity by 2025. India stands out as the biggest opportunity: the country expects $25 billion in data center investments by 2030, which will increase its data center capacity to over 4,500 megawatts.

Why is Asia growing so fast? Several reasons:

- Cheaper electricity costs
- Lots of available land
- Growing economies that need more computing power
- Governments that support tech infrastructure

Global Infrastructure Facts:

- Total data centers worldwide: 11,800+
- US facilities: 5,400 (nearly 50% of global total)
- Hyperscale centers handle: 75% of global capacity
- Asia-Pacific growth: 50% of new capacity by 2025
- India investment: $25 billion by 2030

Compute as the New Capital Magnet

Why has compute crossed this threshold from infrastructure to an asset class? The answer lies in scarcity, capital demand, and geopolitics.

First, GPUs are scarce. Lead times for top-tier accelerators can stretch over a year, and even when available, they come at a premium. Enterprises willing to pay hundreds of millions are often forced to wait. Scarcity has created a premium market where compute is treated like a precious commodity.

Second, compute attracts capital because it underpins growth. Just as real estate became the basis for mortgages, securitization, and REITs, compute clusters are becoming the basis for investment vehicles. Hedge funds and venture capitalists are pouring billions into GPU farms, data centers, and decentralized compute projects. Sovereign wealth funds see compute capacity as a strategic hedge, much like oil reserves once were.

Third, compute is geopolitical. Nations are investing heavily in domestic data centers, subsidizing infrastructure, and restricting GPU exports. Canada, for instance, announced a $300 million subsidy package in 2024 to secure compute resources for its AI sector. In the United States and China, advanced GPUs are now treated as strategic technologies, with export controls and sanctions shaping global supply. Compute has become a lever of national power, woven into industrial policy and foreign relations.

Beyond Moore’s Law: The Hardware Question

Even if supply chains expand, the traditional roadmap of Moore’s Law is no longer sufficient to meet demand. Shrinking transistors has given us decades of exponential growth, but physics is catching up. AI’s compute needs are simply too large to be met by incremental gains in chip density.

This is why the industry is exploring radical alternatives. Neuromorphic chips, modeled on the architecture of the brain, promise to perform certain tasks with orders of magnitude less energy. Analogue computing, long dismissed as impractical, is finding new relevance in AI workloads where matrix operations dominate. And all-optical computing, where photons rather than electrons carry information, offers the possibility of slashing the energy costs associated with electro-optical conversions.

These innovations promise not evolutionary steps but revolutions in energy efficiency. The industry must move beyond measuring AI progress in floating-point operations or parameter counts and instead adopt energy per inference (often called “watts per inference”, although energy is measured in joules or watt-hours) as the core benchmark. Efficiency, not brute force, will determine the sustainability of AI’s growth.
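Because one watt is one joule per second, a per-inference efficiency benchmark is really a measure of energy: average power draw divided by throughput. The sketch below assumes two hypothetical accelerators with made-up power and throughput figures; `joules_per_inference` is an illustrative helper, and real measurements would also need to account for overheads such as memory, networking, and cooling.

```python
def joules_per_inference(avg_power_watts: float, inferences_per_second: float) -> float:
    """Energy per inference: 1 watt = 1 joule per second, so divide power by throughput."""
    if inferences_per_second <= 0:
        raise ValueError("throughput must be positive")
    return avg_power_watts / inferences_per_second


# Hypothetical accelerators; these numbers are illustrative, not measurements.
systems = {
    "accelerator_a": (700.0, 350.0),  # (average watts, inferences per second)
    "accelerator_b": (300.0, 250.0),
}

for name, (watts, throughput) in systems.items():
    joules = joules_per_inference(watts, throughput)
    milliwatt_hours = joules / 3600 * 1000  # 1 Wh = 3,600 J
    print(f"{name}: {joules:.2f} J per inference ({milliwatt_hours:.3f} mWh)")
```

With these assumed numbers, accelerator_a delivers more inferences per second but spends 2.00 J on each, while accelerator_b spends 1.20 J. That is the kind of difference a per-inference energy benchmark is meant to surface and a raw throughput figure hides.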

The Energy Problem is Getting Scary

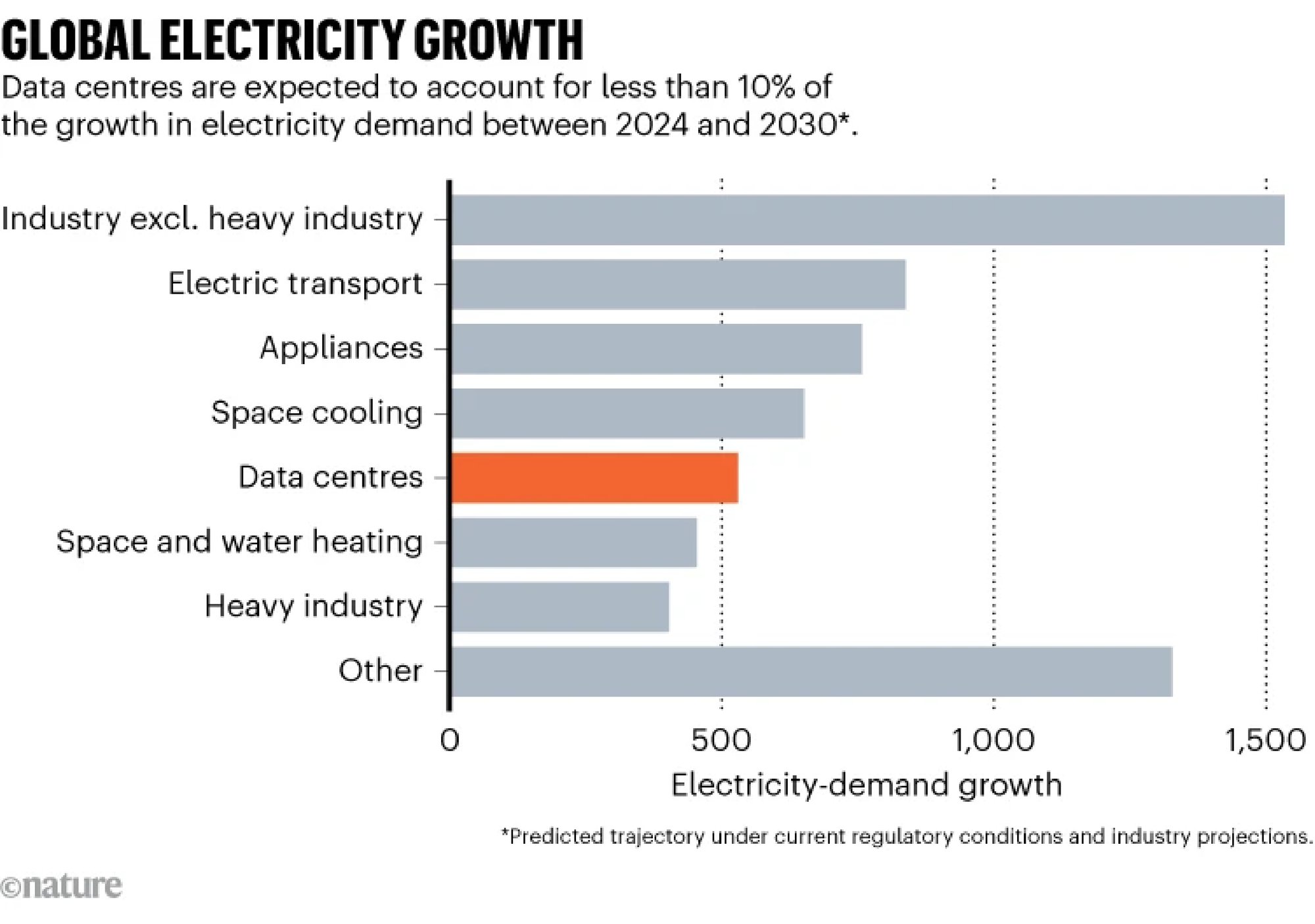

Here’s where things get complicated. All this computing power needs electricity – lots of it. Data centers used about 415 terawatt-hours of electricity globally in 2024. That’s roughly 1.5% of all electricity used worldwide. By 2030, experts think data centers will use 945 terawatt-hours – that’s like powering the entire country of Japan for a year.

In the United States alone, data centers used 176 terawatt-hours in 2023, which is 4.4% of all American electricity. The Department of Energy predicts this could jump to 6.7-12% by 2028. That’s a massive increase in just five years.
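Annual consumption in terawatt-hours is easier to compare with grid capacity, which is usually quoted in gigawatts, once it is converted into average continuous power. A minimal sketch, assuming an 8,760-hour year and using only the figures quoted above; `average_gigawatts` and `implied_total` are illustrative helper names.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (ignores leap years)


def average_gigawatts(twh_per_year: float) -> float:
    """Convert annual energy use (TWh per year) into average continuous power (GW)."""
    return twh_per_year * 1000 / HOURS_PER_YEAR  # 1 TWh = 1,000 GWh


def implied_total(part: float, share: float) -> float:
    """Back out a total from one component and its fractional share of that total."""
    return part / share


for label, twh in [("Global, 2024", 415), ("Global, 2030 (projected)", 945), ("US, 2023", 176)]:
    print(f"{label}: {twh} TWh/yr is about {average_gigawatts(twh):.1f} GW on average")

# 176 TWh at a 4.4% share implies total US electricity use of roughly:
print(f"{implied_total(176, 0.044):,.0f} TWh")
```

This gives about 47.4 GW of average draw worldwide in 2024, about 107.9 GW for the 2030 projection, and about 20.1 GW for US data centers in 2023; the 4.4% share implies total US consumption of roughly 4,000 TWh. These are averages: the generation and grid connections serving data centers must be sized for peak demand, which is higher.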

AI desperately needs more energy, yet even when energy exists (like wind farms in Scotland), it often cannot reach the data centers that need it most due to constrained transmission infrastructure.

This creates a vicious cycle, an “energy Catch-22”: AI growth demands more power, but grid constraints delay the very facilities that growth requires. Left unchecked, compute growth therefore risks slowing AI’s transformative potential and eroding public trust. The International Energy Agency (IEA) warns that global data center electricity demand could double by 2030, with AI as the driving factor.

The situation gets worse when we look at AI-specific data centers. These facilities use much more power than regular data centers. According to the International Energy Agency, a typical AI data center uses as much electricity as 100,000 homes. The biggest ones being built today will use 20 times more than that.

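Energy Consumption Reality:

- Global data center electricity use: about 415 terawatt-hours in 2024 (roughly 1.5% of global electricity)
- Projected global use by 2030: 945 terawatt-hours
- US data centers: 176 terawatt-hours in 2023 (4.4% of US electricity), possibly 6.7–12% by 2028
- AI-focused data centers: a typical one uses as much electricity as 100,000 homes; the largest being built today will use 20 times more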

Water: The Hidden Crisis

Electricity isn’t the only problem. Data centers also need massive amounts of water for cooling: servers get extremely hot, and many facilities use water to keep them from overheating.

The numbers are staggering. Global AI demand could account for 4.2 to 6.6 billion cubic meters of water withdrawal in 2027. To put that in perspective, that’s more than half of the United Kingdom’s total annual water withdrawal, and comparable to the annual withdrawal of 4–6 countries the size of Denmark.

Google alone used over 6 billion gallons of water in 2023 just to cool its data centers. That’s nearly 23 billion liters of water. And Google is just one company – imagine what happens when all the tech giants scale up their AI operations.

The water problem is especially serious because many data centers are built in areas that already face water shortages. California, Arizona, and other dry regions host many data centers, yet these regions are already struggling to supply enough water for their residents.

Water Usage Crisis:

Problem of Water Scarcity and Local Impact:

- Many data centers are built in regions with high water stress or scarcity, leading to competition for water with local communities and agriculture.
- This has caused protests and regulatory actions in different countries (e.g., Chile, Netherlands, US drought-prone states) due to concerns about water supply security.
- The growing proliferation of water-intensive data centers exacerbates the water crisis, especially in drought-affected areas.
- The challenge includes both direct water use for cooling and indirect water consumption related to electricity generation for data centers.

Why This Matters for Everyone

You might think this is just a tech industry problem, but it affects everyone. Here’s why:

Economic Impact: Countries and companies with the most computing power will dominate the global economy. Just like oil-rich countries became wealthy in the 20th century, compute-rich nations will rule the 21st century.

Job Creation: Data centers create thousands of jobs, from construction workers who build them to engineers who maintain them. Communities compete to attract these facilities because they bring good-paying jobs.

National Security: Governments now view computing power as critical for national security. Countries want to control their own data and AI capabilities rather than depending on other nations.

Innovation Speed: Companies with more computing power can develop new products faster. They can test ideas, run experiments, and launch services that smaller competitors can’t match.

Daily Life Impact: The apps on your phone, the websites you visit, and the AI tools you use all depend on these data centers. More computing power means better services for consumers.

Toward Smarter Infrastructure

The solution is not simply to build more data centers and expand the grid indefinitely. That approach is too slow, too expensive, and environmentally damaging. Instead, the future lies in building smarter infrastructure.

Recent studies suggest that GPU-heavy data centers designed specifically for AI workloads can provide grid flexibility at 50% lower cost compared to general-purpose centers, provided that workload scheduling is aligned with grid dynamics (arXiv). Adaptive scheduling can shift deferrable AI work, such as batch inference and training runs, into periods of renewable energy surplus. Dynamic throttling can reduce power draw during peak demand. Thermal-aware computing can minimize cooling requirements by placing workloads according to chip temperatures.
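The adaptive-scheduling and dynamic-throttling ideas above can be sketched as a small greedy scheduler. This is a hypothetical illustration, not the method from the cited study: it assumes an hourly forecast of surplus renewable energy, deferrable batch jobs that each fit within one hour and carry a deadline, and a fixed power cap during peak hours. `Job`, `schedule`, `throttle`, and the 0.7 cap are illustrative choices. Latency-sensitive, interactive inference generally cannot be deferred this way.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    energy_mwh: float   # energy the deferrable job needs (assumed to fit in one hour)
    deadline_hour: int  # latest hour index (inclusive) at which it may run


def schedule(jobs: list[Job], surplus_mwh: list[float]) -> dict[str, int]:
    """Greedy renewable-aware placement of deferrable jobs.

    surplus_mwh[h] is a (hypothetical) forecast of surplus renewable energy in
    hour h. Jobs with the earliest deadline are placed first, each in the
    allowed hour that still has the most surplus left.
    """
    remaining = list(surplus_mwh)
    plan: dict[str, int] = {}
    for job in sorted(jobs, key=lambda j: j.deadline_hour):
        if job.deadline_hour < 0:
            raise ValueError(f"{job.name} has a negative deadline")
        last_hour = min(job.deadline_hour, len(remaining) - 1)
        best_hour = max(range(last_hour + 1), key=lambda h: remaining[h])
        plan[job.name] = best_hour
        remaining[best_hour] -= job.energy_mwh  # any shortfall is covered by grid power
    return plan


def throttle(power_mw: float, is_peak: bool, cap_fraction: float = 0.7) -> float:
    """Dynamic throttling: cap power draw to a fraction of normal during peak hours."""
    return power_mw * cap_fraction if is_peak else power_mw


forecast = [2.0, 5.0, 1.0, 6.0]  # hypothetical surplus per hour, in MWh
jobs = [Job("A", 4.0, 1), Job("B", 3.0, 3), Job("C", 2.0, 3)]
print(schedule(jobs, forecast))  # {'A': 1, 'B': 3, 'C': 3}
print(throttle(10.0, is_peak=True))
```

Placing earliest-deadline jobs first keeps tight deadlines satisfiable before flexible jobs claim the best hours; a production scheduler would also need to model multi-hour jobs, site capacity limits, and forecast error.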

Georgia Tech researchers emphasize that efficiency must be designed across the entire stack, from silicon to software to power delivery systems (Washington Post). This is not a matter of tweaking one layer, but of reimagining the co-design of hardware, software, and energy infrastructure.

Decentralization as a Release Valve

One of the most promising developments is the rise of decentralized compute. Instead of concentrating workloads in hyperscale clusters, where grid strain is already most severe, decentralized platforms distribute AI jobs across globally dispersed nodes. These nodes can be enterprise GPUs, smaller data centers, or even idle consumer hardware, stitched together through decentralized physical infrastructure networks.

This model offers three critical advantages. First, it reduces grid bottlenecks by shifting demand away from overburdened hubs. Second, it unlocks latent supply, turning idle hardware into productive, yield-bearing assets. Third, it introduces market-driven efficiency, with workloads routed dynamically to the cheapest, most sustainable nodes available at any given time.
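The third advantage, routing each workload to the cheapest, most sustainable node available, amounts to a filtered minimum over candidate nodes. A minimal sketch under stated assumptions: the node names, prices, carbon intensities, and the `carbon_weight` that trades one against the other are all hypothetical, and this is not Spheron’s or any specific network’s routing algorithm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    node_id: str
    price_per_gpu_hour: float  # USD, hypothetical
    carbon_g_per_kwh: float    # carbon intensity of the node's local grid
    free_gpus: int


def route(job_gpus: int, nodes: list[Node], carbon_weight: float = 0.001) -> Optional[Node]:
    """Return the node with the lowest blended cost that can fit the job, or None.

    blended cost = price + carbon_weight * carbon intensity, where carbon_weight
    (an assumed tuning knob) turns gCO2/kWh into an equivalent price penalty.
    """
    candidates = [n for n in nodes if n.free_gpus >= job_gpus]
    if not candidates:
        return None
    return min(candidates, key=lambda n: n.price_per_gpu_hour + carbon_weight * n.carbon_g_per_kwh)


nodes = [
    Node("hyperscale-hub", 2.50, 400.0, 64),
    Node("regional-dc", 1.80, 30.0, 8),
    Node("idle-consumer-gpu", 1.20, 700.0, 1),
]
for gpus in (1, 8, 16, 128):
    chosen = route(gpus, nodes)
    print(gpus, "->", chosen.node_id if chosen else "no capacity")
```

Capacity is a hard filter while price and carbon are blended into one soft score. With the default weight, a one-GPU job goes to the low-carbon regional node; setting `carbon_weight=0.0` routes purely on price and picks the idle consumer GPU instead.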

Spheron: Building Smarter Compute Infrastructure

Spheron Network is a leader in this decentralized approach. Its mission is to build the world’s largest community-powered compute stack for AI, Web3, and agent-based applications. By routing workloads across more than 44,000 nodes globally, Spheron avoids the pitfalls of hyperscale centralization while ensuring resilience and cost efficiency.

In regions like Asia-Pacific, where energy shortfalls already reach 15–25 gigawatts, Spheron can enable workloads to be routed to underutilized regions, alleviating local shortages. By providing up to 93% cost savings compared to AWS or Azure, Spheron not only makes compute more accessible but also ensures that efficiency is incentivized.

The network is powered by its native token, $SPON, which financializes compute by rewarding GPU providers and granting users discounted access. In doing so, Spheron transforms compute into a community-owned capital layer, one that mirrors the way oil companies once structured energy markets but with decentralization and sustainability at its core.

Conclusion: Compute as the Electricity of the AI Age

Compute has become the oil of the AI age. It is scarce, it is strategic, and it is shaping global capital flows. But unlike oil, compute offers the possibility of being cleaner, more efficient, and more distributed, if the right choices are made.

The rise of compute as an asset class is not just about investment vehicles, futures contracts, or GPU leases. It is about recognizing that the infrastructure of intelligence is now one of the most valuable and contested resources in the world. The challenge is to ensure that this asset class is built on smarter foundations, not just bigger ones.

Companies like Spheron are showing what this future can look like: community-powered, globally distributed, and aligned with both economic and environmental needs. The question is no longer whether AI will change the world. It already has. The real question is whether the world can sustain AI’s rise, and whether compute as an asset class will be remembered as a story of unchecked extraction, or as the foundation of a smarter, more resilient future.