We are excited to welcome DIN to the Spheron ecosystem. DIN is the first AI Agent blockchain, and it comes with a clear mission: build the core infrastructure for AI agents and decentralized AI applications. Spheron will power DIN with decentralized GPU compute, reliable runtime environments, and cost-efficient scaling so AI teams can ship agentic apps faster.

This announcement is more than a logo on a slide. It connects a purpose-built agent chain with a decentralized compute network designed for AI workloads. Together, we aim to make it simple for builders to deploy, scale, and monetize AI agents on open infrastructure.

Problem Statement: What Is Broken Today

Modern AI tools promise personalization and responsiveness, but they often fail in three major ways:

- Privacy and Data Retention Risks: A U.S. court recently ordered OpenAI to preserve all ChatGPT conversation logs, including deleted ones, as part of a copyright lawsuit. The order required the company to keep these logs indefinitely for Free, Plus, Pro, and Team users, so users cannot rely on deletions being permanent. More broadly, when so much user interaction is stored outside personal control, the risk of exposure or misuse grows. Sensitive personal or legal queries shared with AI chatbots may become part of public records or legal evidence.

- Escalating Inference and Infrastructure Costs: Many teams building agentic or model-driven applications see inference costs (the cost of running models in real time) rise rapidly. Multi-GPU cloud instances built on NVIDIA’s H100 can cost over $30,000 per month. Public cloud providers often add fees for bandwidth, storage, and egress, and latency overheads add hidden costs on top. Together, these make scaling expensive and unpredictable. In many cases, inference becomes the largest operating cost once a model is live: training may be expensive, but running inference continuously and at scale often dominates the total cost.

- Lack of Ownership, Transparency, and Trust: Users rarely control where their data is stored, whether models are audited, or whether they share in the value their data helps generate. The “black box” nature of many AI systems means users cannot verify model behavior, cannot revoke access, and cannot inspect how decisions are made.

These gaps in privacy, cost, and trust limit AI’s ability to become truly useful and fair. They also slow adoption in sectors that require strict privacy (healthcare, legal, finance) and make it harder for developers and communities to build sustainable, long-term agent ecosystems.
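To make the cost argument concrete, here is a back-of-the-envelope sketch in Python. The hourly rate, GPU count, and training budget are hypothetical placeholders rather than quotes from any provider, and the helper names are our own. Swap in your actual pricing to see how quickly recurring inference spend overtakes a one-off training bill.

```python
# Back-of-the-envelope GPU cost model. All rates below are hypothetical placeholders.
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


def monthly_gpu_cost(hourly_rate_per_gpu: float, num_gpus: int, utilization: float = 1.0) -> float:
    """Cost of running num_gpus GPUs for a month at the given fraction of hours."""
    return hourly_rate_per_gpu * num_gpus * HOURS_PER_MONTH * utilization


def inference_share(training_cost: float, monthly_inference_cost: float, months_live: int) -> float:
    """Fraction of total spend that goes to inference after months_live months in production."""
    inference_total = monthly_inference_cost * months_live
    return inference_total / (training_cost + inference_total)


if __name__ == "__main__":
    # Hypothetical: an 8-GPU node at $6 per GPU-hour, always on.
    serving = monthly_gpu_cost(6.0, 8)
    print(f"serving cost per month: ${serving:,.0f}")
    # Hypothetical one-off training budget of $50,000.
    for months in (1, 6, 12):
        share = inference_share(50_000, serving, months)
        print(f"month {months}: inference is {share:.0%} of total spend")
```

Because serving costs recur every month while training is often closer to a one-off expense, inference's share of total spend keeps climbing the longer a model stays in production.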

Who DIN is and what they are building

DIN began as the Data Intelligence Network and has evolved into a full-stack platform for agents. The team has shipped real products across data analytics, AI agent UGC, and enterprise knowledge tools. They have raised capital from leading investors and have grown from early dashboards in the Polkadot ecosystem to a broader vision: build an AI Agent blockchain that treats data, people, and AI as coequal parts of one network.

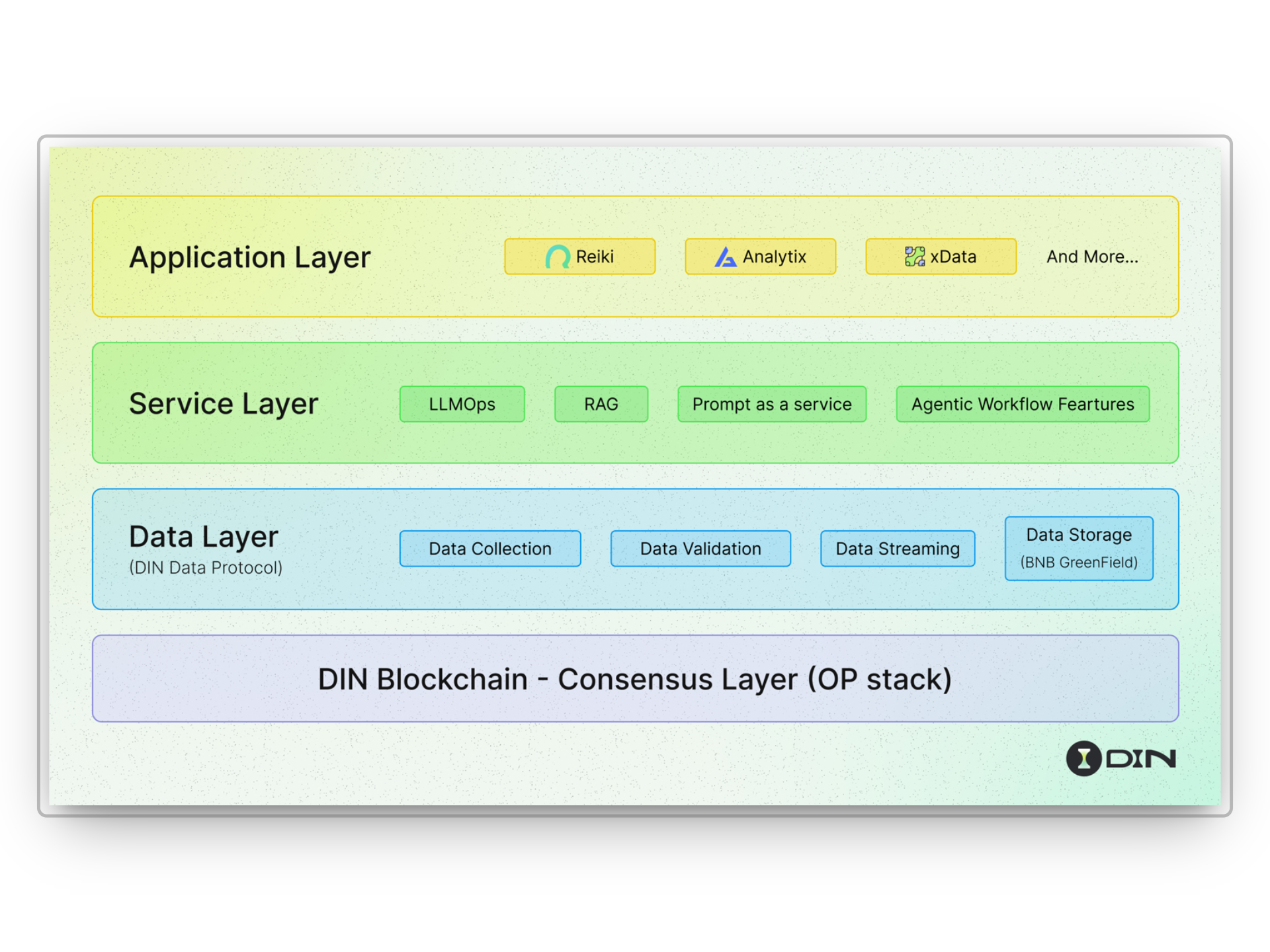

At a technical level, DIN organizes its chain in four layers: consensus, data, service, and application. The consensus layer anchors security and verifiable execution. The data layer ingests on-chain and off-chain data and prepares it for agent use through components like DIN Chipper Nodes, which validate, clean, and vectorize inputs. The service layer provides LLMOps, Prompt as a Service, Retrieval-Augmented Generation, and visual agent workflows. The application layer already includes shipped products such as Analytix, Reiki, and xData that demonstrate real usage across analytics, agent creation, and multilingual voice data.
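As a rough illustration of the validate, clean, and vectorize flow described for the data layer, here is a minimal, self-contained Python sketch that ends with a simple retrieval step of the kind a RAG service builds on. It is not DIN's implementation or API: the `Record` type, the validation rule, and the hashing-trick vectorizer are hypothetical stand-ins, and a production pipeline would use a learned embedding model and a vector store instead.

```python
from __future__ import annotations

import hashlib
import math
import re
from dataclasses import dataclass

# Hypothetical embedding size; real pipelines use a learned embedding model.
DIM = 256


@dataclass
class Record:
    """A raw input record (hypothetical schema)."""
    source: str
    text: str


def validate(record: Record) -> bool:
    """Stand-in validation rule: require a source and non-blank text."""
    return bool(record.source) and bool(record.text.strip())


def clean(text: str) -> str:
    """Strip HTML-like tags, collapse whitespace, and lowercase."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip().lower()


def vectorize(text: str) -> list[float]:
    """Hashing-trick bag of words, L2-normalized so a dot product is cosine similarity."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def ingest(records: list[Record]) -> list[tuple[str, list[float]]]:
    """Validate, clean, and vectorize records, dropping any that fail validation."""
    index = []
    for record in records:
        if not validate(record):
            continue
        cleaned = clean(record.text)
        index.append((cleaned, vectorize(cleaned)))
    return index


def retrieve(index: list[tuple[str, list[float]]], query: str, k: int = 2) -> list[str]:
    """Return the k indexed texts with the highest cosine similarity to the query."""
    q = vectorize(clean(query))
    ranked = sorted(index, key=lambda item: sum(a * b for a, b in zip(q, item[1])), reverse=True)
    return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    docs = [
        Record("wiki", "<p>GPU   pricing for H100</p>"),
        Record("", "orphan record with no source"),
        Record("docs", "Agents retrieve documents before answering"),
    ]
    index = ingest(docs)
    print(len(index), retrieve(index, "H100 GPU pricing", k=1))
```

Invalid records never reach the index, and every stored text is normalized the same way as incoming queries, which is why cleaning upstream tends to make retrieval less brittle.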

The vision is straightforward. Agents will become the primary interface for users. Those agents need high-quality data, trustworthy execution, and the ability to collaborate. DIN wants to be the chain that standardizes those needs and turns them into a consistent developer experience.

Why this matters for AI builders

Agentic apps are moving from demos to production. Teams need three things to make that leap: reliable compute at sane prices, a data plane that agents can trust, and programmable workflows that scale from one agent to many. DIN brings the data and workflow layers that are specific to agents. Spheron brings decentralized GPU capacity, bare-metal performance, and global distribution so inference and training can scale without being locked to a single cloud.

The result is a cleaner path to market. A team can build an agent on DIN, wire in its data sources, and run inference on Spheron. The agent can reason, retrieve, act, and collaborate using DIN’s service layer while relying on Spheron to deliver low-latency, high-throughput compute.

What Spheron provides to DIN

Spheron aggregates GPUs and CPUs from a global network of providers and data centers. We expose that capacity as full VMs and high-performance runtimes that work for model serving, batch inference, RAG pipelines, and fine-tuning. For DIN and its builders, this means:

- Decentralized GPU compute that avoids single-vendor lock-in

- High-efficiency infrastructure for agents, including persistent storage and fast networking

- Cost-effective scaling for production workloads and demand spikes

Spheron’s platform also supports practical developer needs: container images, SSH access, and autoscaling hooks. Teams can start small, test agents in staging, and scale to production without changing providers or architectures.
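This post does not spell out the interface of Spheron’s autoscaling hooks, so the function below is a hypothetical policy such a hook might call. It applies the target-tracking rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), with a tolerance band to avoid flapping and a clamp to a replica range. The function name, parameters, and defaults are assumptions for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 16,
    tolerance: float = 0.1,
) -> int:
    """Target-tracking scaling decision (hypothetical policy, HPA-style rule).

    current_metric: observed average load per replica (e.g. requests/s or GPU utilization).
    target_metric:  desired load per replica.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica range.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    print(desired_replicas(2, 90, 30))  # heavy load: scale out
    print(desired_replicas(4, 31, 30))  # within tolerance: hold
    print(desired_replicas(4, 5, 30))   # light load: scale in
```

For example, two replicas each handling 90 requests per second against a target of 30 would scale out to six replicas, while a load within 10% of the target leaves the count unchanged.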

What developers and communities can expect

Better price-performance for inference. Spheron’s decentralized marketplace helps teams run agents with lower operating costs. That makes it viable to serve more users, keep latencies low, and experiment with larger context windows or ensembles.

Cleaner data and safer execution. DIN’s data layer and workflow tools help agents consume structured and unstructured inputs with validation and vectorization. This improves retrieval quality and reduces brittle behavior.

A path to multi-agent systems. DIN’s Agentic Workflow and RAG support collaboration between agents. Spheron’s horizontal scale lets teams deploy multiple models and tool-using agents without rewriting infra.

Easier go-to-market. DIN’s application layer has already seen traction with products like Reiki and Analytix. Spheron’s compute supply shortens the distance from prototype to production and allows community growth without infrastructure stress.

Moving Forward

With this partnership, we mark a turning point. The pieces intelligent agents need (high-quality data, on-chain accountability, and scalable compute) are now coming together in one stack.

The union of DIN’s agent-first blockchain and Spheron’s decentralized compute fabric creates something neither could achieve alone: a platform where AI agents can be built with real trust, real ownership, and real scale. If you are building an agent, analyzing data, or simply curious about how AI can serve users rather than extract from them, now is the time to act.

If you are building agents, this is your moment. Deploy your agent logic on DIN, run inference on Spheron, and give users a faster, cheaper, and more transparent experience. You get programmable data flows, verifiable workflows, and scalable compute without central points of failure.

Start here:

The infrastructure layer for intelligent agents is here. Build with DIN and Spheron, and take your AI from demo to durable product.