Every millisecond matters. When a user loads a webpage, advertisers enter a silent auction that completes in under 100 milliseconds. Bids that arrive after the deadline are simply discarded, so a platform that responds too late never gets to compete for the impression, and it loses both revenue and trust.

This is real-time bidding (RTB), and it’s unforgiving.

For AdTech platforms handling 100 billion auction requests daily, the infrastructure choice between bare metal and public cloud isn’t academic; it directly impacts win rates, revenue, and competitiveness. As latency-sensitive workloads increasingly demand AI-powered inference and real-time model training, the case for dedicated, optimized infrastructure has become impossible to ignore.

The RTB Gauntlet: Racing Against the Clock

Real-time bidding is one of the most demanding computing challenges in the modern digital economy. Exchanges expect end-to-end responses within roughly 100 milliseconds, but after accounting for network latency between the exchange and your DSP, the actual processing window shrinks to a razor-thin 50–80 milliseconds. Miss that window by even a few milliseconds and the exchange discards your bid, handing the impression to a faster competitor.

The infrastructure supporting this operates at a mind-bending scale. Major ad exchanges process over 500,000 auctions per second during peak hours, with some handling upwards of 600 billion bid requests daily. During peak shopping seasons and sporting events, these volumes explode even further.
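To translate daily volumes into the per-second rates that servers actually face, the small Python sketch below divides a daily request count by the 86,400 seconds in a day. It assumes perfectly uniform traffic, so it yields an average that real peaks will exceed; the two volumes are the ones quoted in this article.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

def average_rps(requests_per_day: float) -> float:
    """Average requests per second, assuming traffic is spread evenly over the day."""
    return requests_per_day / SECONDS_PER_DAY

# Daily volumes quoted in this article.
for label, daily in [("100 billion requests/day", 100e9),
                     ("600 billion requests/day", 600e9)]:
    print(f"{label}: ~{average_rps(daily):,.0f} requests/second on average")
```

Because real traffic is bursty, peak rates sit well above these flat averages, which is why capacity has to be planned for peaks rather than means.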

The latency budget allocation reveals why infrastructure choice matters so dramatically:

Figure: RTB latency budget allocation, showing how the 100ms deadline is distributed

In bare metal environments, the share of that budget lost to infrastructure overhead shrinks substantially, leaving more time for the actual bidding decision. In cloud VMs, virtualization overhead can consume 30–45% of the available processing window, time that nothing later in the pipeline can win back.
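The arithmetic behind this budget is simple enough to sketch. The Python function below subtracts the network round trip from the exchange deadline, removes a fractional platform overhead, and then removes fixed pipeline stages to leave the time available for the bidding decision itself. The 30 ms round trip, the 20 ms of fixed stages, and the 5% bare metal overhead are hypothetical values chosen for illustration; only the 100 ms deadline and the 30–45% virtualization range come from the text.

```python
def decision_budget_ms(deadline_ms: float, network_rtt_ms: float,
                       overhead_fraction: float, fixed_stages_ms: float) -> float:
    """Milliseconds left for the bidding decision after network, overhead and fixed stages."""
    processing_window = deadline_ms - network_rtt_ms      # time the DSP actually has
    overhead_ms = processing_window * overhead_fraction   # lost to the platform layer
    return max(0.0, processing_window - overhead_ms - fixed_stages_ms)

# Hypothetical inputs: 30 ms network round trip, 20 ms for parsing/lookup/serialization.
cloud = decision_budget_ms(100, 30, overhead_fraction=0.30, fixed_stages_ms=20)
bare_metal = decision_budget_ms(100, 30, overhead_fraction=0.05, fixed_stages_ms=20)
print(f"cloud VM:   {cloud:.1f} ms for the decision")       # 29.0 ms
print(f"bare metal: {bare_metal:.1f} ms for the decision")  # 46.5 ms
```

Even at the low end of the quoted virtualization range, the decision phase in this example loses 17.5 ms compared with a lightly loaded bare metal host.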

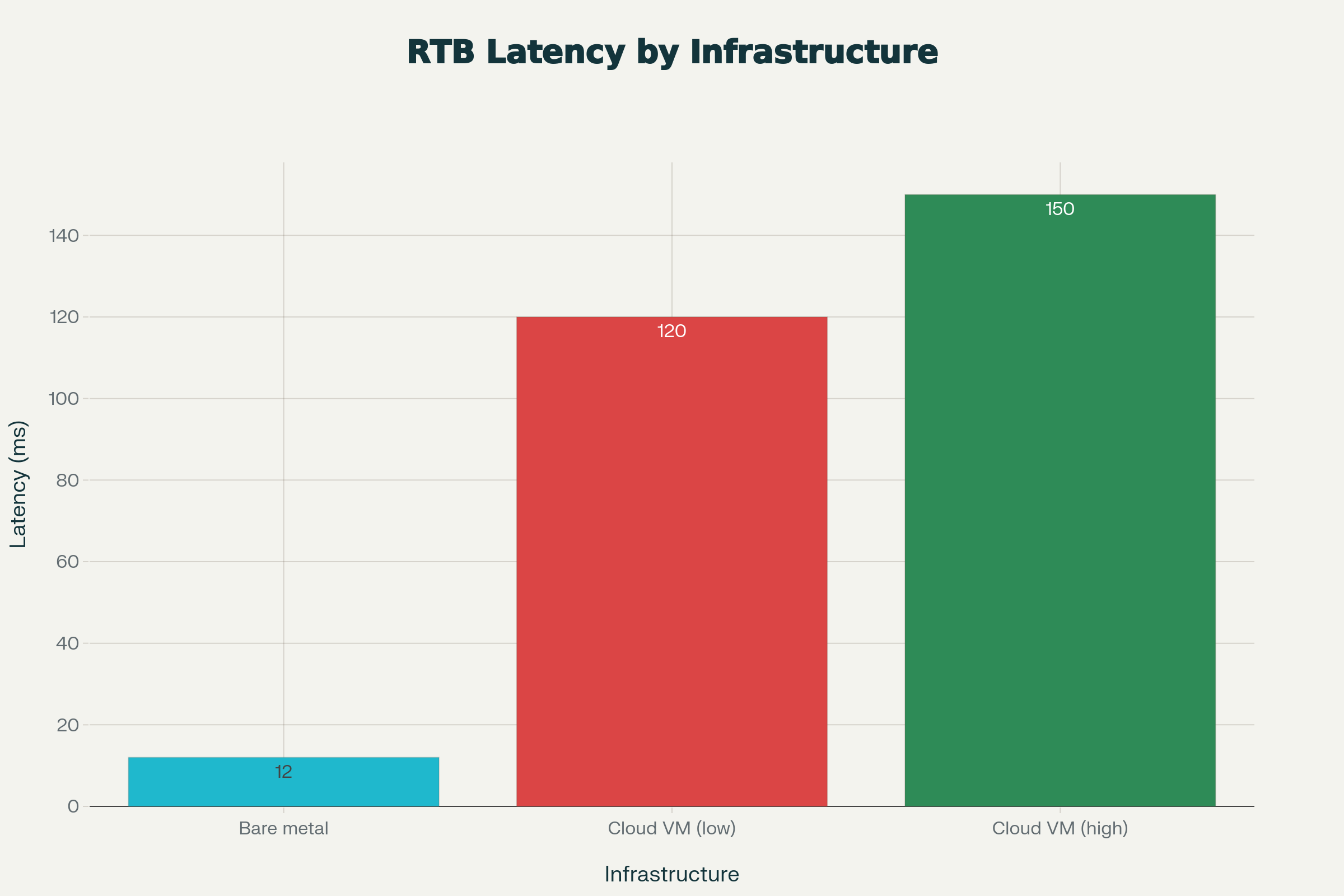

When companies actually measure performance head-to-head, the results are striking. Bare metal configurations consistently deliver sub-100ms P99 latencies, with average response times around 12 milliseconds. Cloud VMs typically land in the 120–150ms range, a 30–40 millisecond gap that’s often the difference between winning and losing an auction.

One blockchain infrastructure provider ran a direct comparison test under identical conditions: same city, same bandwidth, same workload. The cloud node started at 28ms, but once real users hit the system, latency spiked to 150ms. The bare metal node stayed at a consistent 12ms, even under full load.
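P99 figures like these describe the tail, not the typical request, which is why a node can look fast on average yet still miss auctions. The Python sketch below computes a nearest-rank percentile; the sample data (98 responses at 12 ms and 2 at 150 ms) is invented to mirror the numbers above, not taken from any measurement.

```python
import math

def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]

# Invented sample: most responses are fast, a few spike under load.
samples = [12.0] * 98 + [150.0] * 2
mean = sum(samples) / len(samples)
print(f"mean={mean:.2f} ms  p50={percentile(samples, 50)} ms  p99={percentile(samples, 99)} ms")
```

The mean of 14.76 ms hides the fact that one request in fifty takes 150 ms, and an exchange with a 100 ms deadline would discard every one of those slow bids.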

Why does this happen? Virtualization overhead bleeds performance across every resource type:

Figure: Virtualization overhead by resource type, the hidden cost of cloud VMs

- CPU overhead: 9–12% on modern hypervisors (Hyper-V), 17% on Intel virtualization, up to 38% on AMD
- Memory overhead: ~12% due to hypervisor memory management and per-VM allocations
- Disk I/O: 6–8% overhead from emulated storage layers
- Network I/O: 15–25% overhead from virtual network interfaces and packet processing

These costs cascade. When processing decisions must be completed in milliseconds, every percentage point of overhead can push you over the latency cliff.
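One way to see how these percentages accumulate is to inflate each stage of a bid's path by the overhead of the resource it mostly uses. In the Python sketch below, the stage names and base timings are hypothetical; the overhead factors are the upper ends of the CPU (Hyper-V), memory, disk, and network figures listed above.

```python
# Upper-end overheads from the list above (fraction added to each stage's time).
OVERHEAD = {"cpu": 0.12, "memory": 0.12, "disk": 0.08, "network": 0.25}

# Hypothetical bid path: (stage, dominant resource, bare metal time in ms).
STAGES = [
    ("parse request",     "cpu",      2.0),
    ("user profile read", "memory",   6.0),
    ("pricing model",     "cpu",     10.0),
    ("log write",         "disk",     1.0),
    ("send response",     "network",  3.0),
]

def path_latency_ms(virtualized: bool) -> float:
    """Sum stage times, inflating each by its resource's overhead when virtualized."""
    total = 0.0
    for _stage, resource, base_ms in STAGES:
        factor = 1 + OVERHEAD[resource] if virtualized else 1.0
        total += base_ms * factor
    return total

print(f"bare metal:  {path_latency_ms(False):.2f} ms")  # 22.00 ms
print(f"virtualized: {path_latency_ms(True):.2f} ms")   # 24.99 ms
```

This simple additive model puts about 3 ms (roughly 14%) on a 22 ms path; it ignores contention and queueing, which can push tail latencies higher still.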

Real-Time Bidding at Its Core

DSPs (Demand-Side Platforms) are the engines that power RTB, operating under brutal constraints. They must handle millions of ad requests per second while parsing requests, fetching user profiles, running complex pricing algorithms, and generating responses with creative data and tracking pixels, all within 50–80 milliseconds.
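The shape of such a pipeline, with a hard deadline around it, can be sketched with Python's asyncio. Everything here is hypothetical: the request fields are a simplified stand-in rather than the full OpenRTB schema, `fetch_user_profile` simulates a 5 ms lookup with a sleep, and `price_bid` is a placeholder rule. The point is the pattern: if the work does not finish within the budget, the bidder returns a no-bid instead of a late response.

```python
import asyncio
import json
from typing import Optional

async def fetch_user_profile(user_id: str) -> dict:
    """Hypothetical profile lookup; the sleep simulates a 5 ms cache round trip."""
    await asyncio.sleep(0.005)
    return {"user_id": user_id, "segments": ["sports"]}

def price_bid(request: dict, profile: dict) -> float:
    """Placeholder pricing rule: floor price plus a bump for a matching segment."""
    bump = 0.5 if "sports" in profile["segments"] else 0.0
    return request["bidfloor"] + bump

async def handle_bid_request(raw: str) -> dict:
    request = json.loads(raw)                                # 1. parse
    profile = await fetch_user_profile(request["user_id"])  # 2. fetch profile
    price = price_bid(request, profile)                     # 3. price
    return {"id": request["id"], "price": price,            # 4. build response
            "adm": "<creative markup and tracking pixel>"}

async def bid_with_deadline(raw: str, budget_ms: float) -> Optional[dict]:
    """Return a bid, or None (no-bid) if processing overruns the budget."""
    try:
        return await asyncio.wait_for(handle_bid_request(raw), timeout=budget_ms / 1000)
    except asyncio.TimeoutError:
        return None

raw = json.dumps({"id": "req-1", "user_id": "u42", "bidfloor": 1.25})
print(asyncio.run(bid_with_deadline(raw, budget_ms=60)))  # bid returned
print(asyncio.run(bid_with_deadline(raw, budget_ms=1)))   # None: 5 ms lookup exceeds 1 ms
```

Returning nothing on timeout is deliberate: a late bid is worthless to the exchange, so the deadline belongs around the whole pipeline rather than inside individual stages.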

A production RTB system typically allocates 10–20 milliseconds for the actual decision-making phase, leaving precious little margin for error. Research on leading DSPs shows average bid response times of 5–20 milliseconds depending on targeting complexity, degrading by up to 35% during peak traffic in under-optimized systems. One major Chinese DSP cut average response times from 23ms to 9ms purely through algorithmic improvements, without any hardware upgrade.

Building the Right Infrastructure for Each AdTech Component

Minimum Hardware Specifications for AdTech Platform Components

Demand-Side Platforms (DSPs) need high-frequency, multi-core processors optimized for raw speed: 24–64 high-frequency cores (favoring clock speed over core count), 256–512GB RAM for maintaining user profiles and campaign data in memory, 10–25 GbE minimum network bandwidth, and 1–2TB NVMe storage for warm data and telemetry.

Supply-Side Platforms (SSPs) manage billions of impressions daily and prioritize throughput and concurrency: 24–48 cores with strong per-core performance, 256GB to 1TB RAM for publisher inventory catalogs and yield optimization, 25–100 GbE for handling millions of concurrent bid requests, and hybrid storage approaches with fast SSDs for active inventory and large HDDs for historical data.

Data Management Platforms (DMPs) emphasize data processing and bulk analytics: 12–24 cores with support for advanced instruction sets (AVX-512), 256GB to 2TB RAM depending on dataset size and segmentation needs, 10–40 GbE network bandwidth where throughput matters more than ultra-low latency, and massive tiered capacity with NVMe for active segments and 100TB+ HDD arrays for historical data.

Ad Exchanges orchestrate auctions in real time and demand absolute consistency: 32–64 cores at the highest available clock speeds (potentially on single-socket servers to avoid NUMA effects), 512GB to 2TB RAM for maintaining state on millions of concurrent auctions, 25/50/100 GbE+ with direct peering to major DSPs and SSPs, and all-flash storage for operational data with the hottest keys in memory.
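These minimums can also be encoded as a small configuration table for capacity planning. In the Python sketch below, the floors are the low ends of the ranges above (cores, RAM, and network only); the `shortfalls` helper and the component keys are hypothetical names, and storage is left out for brevity.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MinSpec:
    cores: int
    ram_gb: int
    nic_gbe: int

# Low end of each range quoted above.
MIN_SPECS = {
    "dsp":      MinSpec(cores=24, ram_gb=256, nic_gbe=10),
    "ssp":      MinSpec(cores=24, ram_gb=256, nic_gbe=25),
    "dmp":      MinSpec(cores=12, ram_gb=256, nic_gbe=10),
    "exchange": MinSpec(cores=32, ram_gb=512, nic_gbe=25),
}

def shortfalls(component: str, cores: int, ram_gb: int, nic_gbe: int) -> list[str]:
    """List every dimension where a candidate server falls below the minimum."""
    spec = MIN_SPECS[component]
    gaps = []
    if cores < spec.cores:
        gaps.append(f"cores: {cores} < {spec.cores}")
    if ram_gb < spec.ram_gb:
        gaps.append(f"RAM: {ram_gb} GB < {spec.ram_gb} GB")
    if nic_gbe < spec.nic_gbe:
        gaps.append(f"network: {nic_gbe} GbE < {spec.nic_gbe} GbE")
    return gaps

print(shortfalls("dsp", cores=32, ram_gb=512, nic_gbe=25))       # []
print(shortfalls("exchange", cores=32, ram_gb=256, nic_gbe=25))  # ['RAM: 256 GB < 512 GB']
```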

The Optimization Breakthrough: From 29ms to 5ms

Real-time bidding has seen dramatic performance improvements through infrastructure optimization and architectural innovation. Research shows that moving from traditional architectures to optimized pipelined processing can reduce average latency from 29 milliseconds to just 8 milliseconds, a 72% improvement. Combined with a Kafka architecture based on KIP-500 (which replaces ZooKeeper with a self-managed metadata quorum, known as KRaft mode) for distributed stream processing, systems can achieve sub-5-millisecond latencies.
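The quoted percentages follow directly from the before-and-after latencies. A quick Python check, using only the 29 ms, 8 ms, and 5 ms figures from the text:

```python
def reduction_pct(before_ms: float, after_ms: float) -> float:
    """Percentage reduction from a baseline latency."""
    return (before_ms - after_ms) / before_ms * 100

print(f"29 ms -> 8 ms: {reduction_pct(29, 8):.1f}% lower")  # 72.4% lower
print(f"29 ms -> 5 ms: {reduction_pct(29, 5):.1f}% lower")  # 82.8% lower
```

The second result, about 83%, is consistent with the reduction quoted for the KIP-500-based setup if both are measured against the same 29 ms baseline.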

Figure: RTB processing optimization improvements on the path to sub-10ms latency

Key optimization gains include:

- Pipelined processing architectures: 72% latency reduction (29ms → 8ms)
- Kafka KIP-500 architecture: 83% latency reduction, with infrastructure costs dropping 44% while throughput capacity increases 2.7×
- Multi-level caching strategies: 65–80% reduction in data access times, with 47% lower average bid processing times during high-traffic periods through smart prefetching algorithms, achieving 76.3% cache hit rates
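A multi-level cache of the kind described above can be sketched in a few dozen lines. In this hypothetical Python version, L1 is a small in-process LRU and L2 is a larger shared store (a plain dict stands in for something like a remote cache); `loader` represents the slow path to the source of truth. The `prefetch` method warms L1 ahead of demand, which is the simplest form of prefetching; real systems would decide what to prefetch from traffic patterns.

```python
from collections import OrderedDict
from typing import Any, Callable, Dict

class TwoLevelCache:
    """L1: small in-process LRU. L2: larger shared store. loader: slow path."""

    def __init__(self, l1_capacity: int, l2_store: Dict[Any, Any],
                 loader: Callable[[Any], Any]):
        self.l1: "OrderedDict[Any, Any]" = OrderedDict()
        self.l1_capacity = l1_capacity
        self.l2 = l2_store    # stand-in for e.g. a remote cache (assumption)
        self.loader = loader  # hypothetical slow path, e.g. a database read
        self.hits = {"l1": 0, "l2": 0, "miss": 0}

    def _put_l1(self, key: Any, value: Any) -> None:
        self.l1[key] = value
        self.l1.move_to_end(key)
        if len(self.l1) > self.l1_capacity:
            self.l1.popitem(last=False)  # evict the least recently used entry

    def get(self, key: Any) -> Any:
        if key in self.l1:
            self.l1.move_to_end(key)     # mark as most recently used
            self.hits["l1"] += 1
            return self.l1[key]
        if key in self.l2:
            self.hits["l2"] += 1
            value = self.l2[key]
        else:
            self.hits["miss"] += 1
            value = self.loader(key)
            self.l2[key] = value         # populate L2 on the way back
        self._put_l1(key, value)
        return value

    def prefetch(self, keys) -> None:
        """Warm L1 ahead of demand; these loads are not counted as hits or misses."""
        for key in keys:
            if key not in self.l1:
                value = self.l2[key] if key in self.l2 else self.loader(key)
                self.l2[key] = value
                self._put_l1(key, value)

    def hit_rate(self) -> float:
        total = sum(self.hits.values())
        return (self.hits["l1"] + self.hits["l2"]) / total if total else 0.0

loaded = []
def load_profile(user_id):
    loaded.append(user_id)  # record slow-path reads
    return {"user_id": user_id}

cache = TwoLevelCache(l1_capacity=2, l2_store={}, loader=load_profile)
for uid in ["a", "a", "b", "c", "a"]:
    cache.get(uid)
print(cache.hits, f"hit rate = {cache.hit_rate():.0%}")
```

In this run the fifth lookup for `a` misses L1 (it was evicted) but is served from L2, which is exactly the case a second tier exists to absorb.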

These optimizations compound when running on bare metal infrastructure without virtualization overhead stealing cycles.

The “Noisy Neighbor” Tax: Why Shared Resources Fail AdTech

Cloud environments introduce a hidden performance killer: the “noisy neighbor” effect. When other tenants on your shared physical host consume I/O bandwidth, network capacity, or CPU cycles, your virtual machine suffers collateral damage.

A single VM doing heavy database backups can saturate the I/O bandwidth of the entire shared storage array, forcing all other VMs to wait. A neighbor running aggressive network operations can saturate the physical NIC, causing packet loss and increased latency for everyone else.

For AdTech, this is catastrophic. When milliseconds determine winners and losers, you can't afford performance variability caused by unknown workloads running on the same physical hardware. Bare metal eliminates this problem: your servers don't share physical resources with other tenants, so performance stays consistent, predictable, and repeatable.
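On Linux guests, one direct signal of noisy-neighbor CPU contention is steal time: time a virtual CPU was ready to run while the hypervisor was serving someone else. It appears as the eighth counter on the aggregate `cpu` line of `/proc/stat`. The Python sketch below computes the steal share between two snapshots; the snapshot strings are made-up values, and on a real host you would read `/proc/stat` twice, about a second apart. On bare metal with no hypervisor, this counter should stay at zero.

```python
def cpu_counters(proc_stat_text: str) -> list[int]:
    """Parse the aggregate 'cpu' line of /proc/stat into integer tick counters."""
    for line in proc_stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            return [int(v) for v in fields[1:]]
    raise ValueError("no aggregate 'cpu' line found")

def steal_percent(before: str, after: str) -> float:
    """Percentage of CPU time stolen by the hypervisor between two snapshots."""
    # Order: user nice system idle iowait irq softirq steal guest guest_nice.
    # guest/guest_nice are already included in user/nice, so only the first 8 are summed.
    b, a = cpu_counters(before)[:8], cpu_counters(after)[:8]
    deltas = [now - then for now, then in zip(a, b)]
    total = sum(deltas)
    return 100.0 * deltas[7] / total if total else 0.0

# Made-up snapshots; in practice read open("/proc/stat").read() twice, about 1 s apart.
before = "cpu  1000 0 500 8000 100 0 50 200 0 0\n"
after = "cpu  1500 0 700 8150 100 0 100 300 0 0\n"
print(f"steal: {steal_percent(before, after):.1f}%")  # steal: 10.0%
```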

Real-World Impact: The Numbers That Matter

Companies that switch to dedicated infrastructure report striking efficiency gains. Customers of spheron.ai report up to 86% lower compute costs and 3× better system performance compared with virtualized deployments. Neon Labs met its real-time response targets while cutting cloud costs by 60%. In one optimization case study, PowerLinks reduced infrastructure spending from $200,000/month to $10,000/month, a 20× reduction, without sacrificing performance.

The Trade Desk, one of the largest DSPs globally, spent $264 million on platform operations during the first nine months of 2023, roughly $970,000 per day over that period. At that scale, infrastructure decisions compound: a 5% performance improvement across millions of daily auctions translates to millions in recovered revenue, while a 20% cost reduction directly improves margins.
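The daily figure depends on which period the nine-month total is spread across. A short Python check using the calendar, with the $264 million figure quoted above:

```python
from datetime import date

nine_month_spend = 264_000_000  # USD, platform operations, Jan to Sep 2023 (quoted above)
days = (date(2023, 10, 1) - date(2023, 1, 1)).days  # Jan 1 through Sep 30

print(days)                            # 273
print(round(nine_month_spend / days))  # 967033 USD per day over the period
print(round(nine_month_spend / 365))   # 723288 USD per day if spread over a full year
```

Spread over the 273 days in which it was incurred, the spend comes to roughly $967,000 per day; dividing by a full 365-day year gives about $723,000.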

For small, early-stage AdTech platforms, the cloud offers speed and flexibility; you can spin up capacity instantly without hardware lead times, and you only pay for what you use. This works in year one.

But starting in year two, the economics flip. Bare metal infrastructure, with its higher upfront costs, amortizes across stable, predictable workloads. By year three, the total cost of ownership advantage becomes undeniable. By year five, bare metal can deliver 20–50% cost savings.

The crossover point typically comes once traffic has stabilized rather than spiking constantly, you can predict resource utilization 3–6 months ahead, your DSP or SSP handles millions of daily impressions, and performance consistency matters more than elastic scaling. For serious AdTech operations, this threshold arrives quickly.
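The crossover logic can be sketched as a comparison of cumulative costs. All dollar amounts in this Python example are hypothetical, picked only to illustrate the shape of the curve: a cloud bill of $100,000 per month versus a bare metal deployment with $1.2 million upfront and $50,000 per month in hosting and operations.

```python
from typing import Optional

def crossover_month(cloud_monthly: int, bm_upfront: int, bm_monthly: int,
                    horizon_months: int = 60) -> Optional[int]:
    """First month in which cumulative bare metal cost is no higher than cumulative cloud cost."""
    for month in range(1, horizon_months + 1):
        if bm_upfront + bm_monthly * month <= cloud_monthly * month:
            return month
    return None  # no crossover within the horizon

# Hypothetical costs in USD.
CLOUD, BM_UPFRONT, BM_MONTHLY = 100_000, 1_200_000, 50_000
print(crossover_month(CLOUD, BM_UPFRONT, BM_MONTHLY))  # 24

five_year_cloud = CLOUD * 60
five_year_bm = BM_UPFRONT + BM_MONTHLY * 60
print(f"5-year savings: {1 - five_year_bm / five_year_cloud:.0%}")  # 30%
```

With these inputs the lines cross at month 24 and bare metal ends the five-year horizon about 30% cheaper, inside the 20–50% range quoted above. Different inputs move the crossover, which is why stable, forecastable utilization matters.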

The Hidden Costs of Virtualization

Cloud adoption creates upstream complexity and costs that rarely appear on initial bills. The virtualization stack itself (hypervisor licensing, management platforms such as vCenter or Kubernetes control planes, and monitoring systems) adds up fast. Enterprise-grade virtualized environments require sophisticated resource management and active optimization to avoid wasting capacity.

Cloud teams must over-provision resources to buffer against the unpredictable performance degradation caused by noisy neighbors. This “insurance cost” gets baked into monthly bills as idle capacity held in reserve for worst-case scenarios. In addition, the skills required to debug performance issues in virtualized environments are specialized and expensive: when latency problems emerge, distinguishing between application bugs, virtualization overhead, and noisy-neighbor effects requires expertise that can take weeks to develop.

Building the Right Hybrid Strategy

Sophisticated AdTech companies don’t treat this as either-or. Instead, they build hybrid strategies:

On Bare Metal (Latency-Critical Hot Paths):

- Real-time bidding decision engines
- User profile cache and feature serving
- Ad exchange transaction processing
- On-host monitoring and profiling tools
- Low-latency network infrastructure

On Cloud (Flexible, Burst Workloads):

- ML model training on large datasets
- Data warehouse and analytics pipelines
- CI/CD pipelines and testing infrastructure
- Batch reporting and aggregation jobs
- Development and staging environments

This approach gives you the raw performance and deterministic latency where milliseconds decide outcomes, plus the flexibility and scale of the cloud, where absolute speed isn’t the primary constraint.

The Future Demands Decisiveness

Looking ahead, AdTech infrastructure faces mounting pressure. Generative creative optimization and dynamic model-driven bidding are becoming mainstream, making GPU compute and low-latency inference pipelines foundational rather than optional. Energy prices also remain volatile amid geopolitical tensions. Unlike industries that can dial back operations, AdTech must maintain speed and scale 24/7, so as cloud providers pass rising energy costs on to customers, the efficiency of bare metal becomes increasingly attractive.

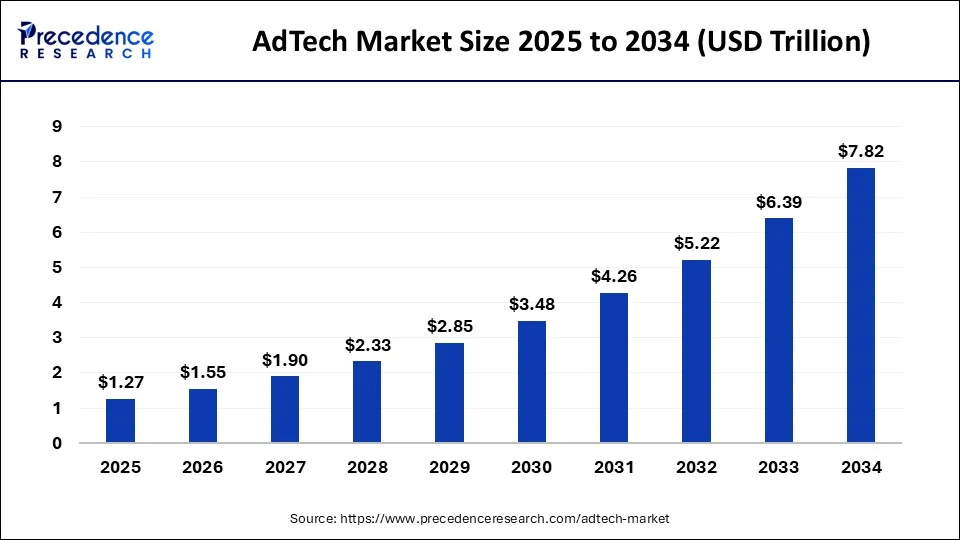

With cookie deprecation and tightening privacy regulations, platforms are moving to first-party identity solutions and real-time data consolidation, which requires infrastructure with full control and isolation, something bare metal provides natively. The global AdTech market is projected to grow from $1.04 trillion in 2024 to $7.82 trillion by 2034, a 22.35% CAGR. Growth on that scale demands infrastructure that scales efficiently, and that is where bare metal wins on both performance and unit economics.
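The projection's growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, using the 2024 and 2034 figures quoted above:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

rate = cagr(1.04, 7.82, 10)               # trillions of USD, 2024 -> 2034
print(f"{rate:.2%}")                      # 22.35%
print(f"{1.04 * (1 + rate) ** 10:.2f}")   # 7.82, back to the 2034 projection
```

The stated 22.35% rate is consistent with the start and end values of the projection.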

The Decision Point: Speed Versus Flexibility

The fundamental trade-off in infrastructure choice comes down to: Do you optimize for speed or for flexibility? For early-stage platforms still finding product-market fit, cloud makes sense. You can pivot, experiment, and scale elastically, and overhead costs are acceptable when infrastructure expenses are modest.

But for platforms that have stabilized and grown, operate at predictable scale, and where latency directly impacts revenue, the decision should be clear: bare metal is the superior choice.

Here’s why:

Predictable Performance: No virtualization overhead means consistent response times. Your win rate doesn’t depend on what some other customer is doing on the same physical hardware.

Customization and Control: Kernel tuning, network stack optimization, specialized monitoring tools, and custom security configurations all become possible. You can optimize for your exact workload, not a generic VM template.

Powerful Hardware: Modern dedicated servers ship with high-frequency CPUs, massive RAM (often 256GB–1TB+), NVMe SSD storage, and 10/25/50/100 GbE networking, hardware purpose-built for the demands of real-time bidding.

Long-Term Economics: For stable workloads, bare metal delivers lower per-transaction costs and more predictable budgeting than cloud bills that escalate with scale.

Competitive Advantage: In a business measured in milliseconds, a 30–50 millisecond latency advantage translates to more wins, higher revenue, and more market share. The competitors who invested in the right infrastructure first will maintain that edge.

The Call: Invest Now or Regret Later

The AdTech industry doesn’t reward complacency. Platforms that made the infrastructure investment five years ago are now operating at 2–3× the efficiency of those still relying on the cloud. The companies making the right choice today will be the market leaders of tomorrow.

Bare metal infrastructure isn’t a trendy technical detail; it’s the foundational strategy that separates winning platforms from commodity players in a business where every millisecond translates to revenue.

For any AdTech platform serious about scale, predictability, and performance, bare metal infrastructure isn’t just better. It’s essential.