The artificial intelligence revolution has created an unprecedented paradox. While breakthrough AI models and applications multiply at an exponential rate, access to the fundamental computational infrastructure required to build them remains stubbornly concentrated among well-capitalized enterprises. A single NVIDIA H100 GPU commands upwards of $27,500, and an 8-GPU training server can exceed a quarter-million dollars before factoring in data center infrastructure, cooling systems, and specialized IT expertise. For startups, academic researchers, independent developers, and mid-market companies, these capital requirements have traditionally represented an insurmountable barrier to entry.

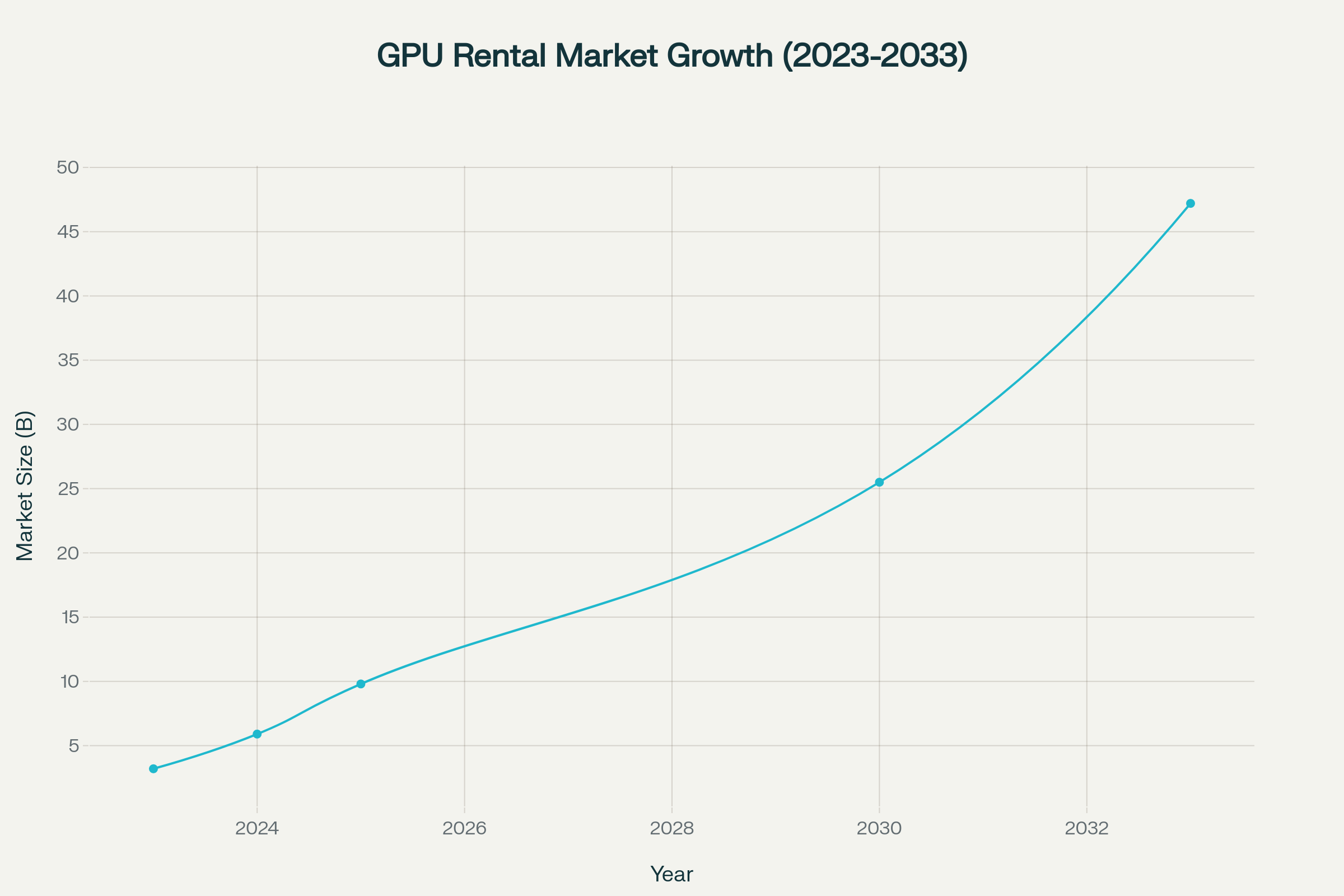

The GPU rental and reservation marketplace has emerged as the defining solution to this accessibility crisis. Rather than requiring massive upfront capital expenditures, these platforms allow organizations to access enterprise-grade computational power through flexible, on-demand rental models. The transformation is both rapid and comprehensive: the global GPU rental market has expanded from $3.2 billion in 2023 to a projected $9.8 billion in 2025, and analysts forecast it will reach $47.2 billion by 2033, representing nearly fifteen-fold growth in a single decade.

This explosive expansion reflects a fundamental shift in how computational infrastructure is provisioned and consumed. Cloud GPU rental is no longer an alternative approach for budget-conscious users but rather the default, intelligent choice for organizations at every scale.

The Economic Architecture of the Shift: Why Rental Models Have Won

The ascendance of GPU rental marketplaces is driven by compelling economic advantages that extend far beyond simple cost reduction. The traditional ownership model saddles organizations with a cascade of hidden expenses and operational complexities that rental platforms eliminate entirely.

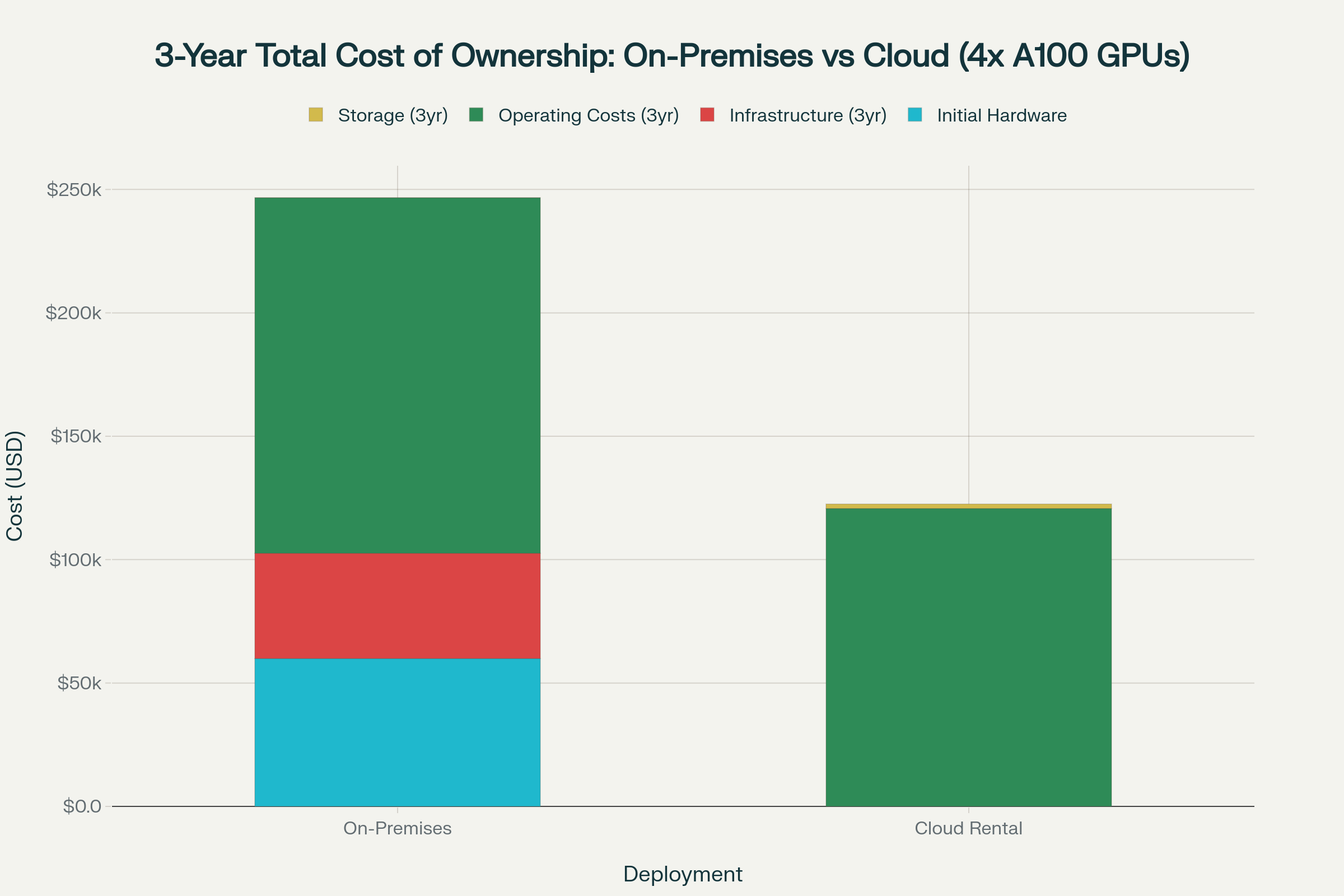

When examining the total cost of ownership over a three-year period for a medium-scale deployment of four NVIDIA A100 GPUs, the financial gap is stark. On-premises infrastructure demands $60,000 in initial hardware purchases, $42,624 in power and cooling infrastructure, and $144,000 in ongoing operational costs including system administration and maintenance. The three-year total reaches $246,624. Cloud rental for equivalent computational capacity totals $122,478 over the same period, delivering $124,146 in savings, a cost reduction exceeding fifty percent.

Cloud GPU rental delivers 50.3% cost savings over on-premises infrastructure in a 3-year total cost of ownership analysis
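The three-year comparison above is plain arithmetic, and a short script makes it easy to re-run with different inputs. The sketch below uses only the dollar figures quoted in the text; the function and variable names are illustrative, and it makes no attempt to model how the cloud rental total itself was derived.

```python
# Three-year total cost of ownership for four A100 GPUs,
# using the figures quoted in the text above.
ON_PREM_COSTS = {
    "hardware": 60_000,
    "power_and_cooling": 42_624,
    "operations": 144_000,
}
CLOUD_RENTAL_TOTAL = 122_478


def tco_comparison(on_prem_costs, cloud_total):
    """Return (on-prem total, absolute savings, savings as a percentage)."""
    on_prem_total = sum(on_prem_costs.values())
    savings = on_prem_total - cloud_total
    savings_pct = 100 * savings / on_prem_total
    return on_prem_total, savings, savings_pct


on_prem_total, savings, savings_pct = tco_comparison(ON_PREM_COSTS, CLOUD_RENTAL_TOTAL)
print(f"On-premises total: ${on_prem_total:,}")           # $246,624
print(f"Cloud rental total: ${CLOUD_RENTAL_TOTAL:,}")     # $122,478
print(f"Savings: ${savings:,} ({savings_pct:.1f}%)")      # $124,146 (50.3%)
```

Changing any single entry in `ON_PREM_COSTS` shows how sensitive the result is to that line item; operational costs are by far the largest component in this scenario.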

The break-even analysis reveals even more nuanced decision-making criteria. For an NVIDIA H100 GPU with a purchase cost of $27,500 and average rental rate of $2.85 per hour, continuous usage for approximately 13.4 months represents the financial breakpoint where ownership becomes more economical than rental. For an A100 at $12,000 and $1.64 hourly, the threshold is 10.2 months. For most AI development workflows characterized by intensive bursts of training followed by extended periods of lighter inference loads or inactivity, sustained utilization patterns rarely approach these thresholds.
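The break-even thresholds above can be reproduced with a few lines of code. This is a minimal sketch that assumes 30-day months of round-the-clock usage (720 hours), which is the convention that matches the quoted 13.4 and 10.2 month figures. The `utilization` parameter is an addition for illustration, and the model deliberately ignores power, cooling, staffing, and resale value.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: 30-day months of continuous use


def break_even_months(purchase_price, hourly_rate, utilization=1.0):
    """Months of rental after which cumulative rent equals the purchase price.

    utilization is the fraction of each month the GPU is actually rented
    (1.0 = continuous use). Hardware cost only; no power, cooling, or staff.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    rental_hours_to_match = purchase_price / hourly_rate
    return rental_hours_to_match / (HOURS_PER_MONTH * utilization)


h100_months = break_even_months(27_500, 2.85)
a100_months = break_even_months(12_000, 1.64)
print(f"H100 break-even: {h100_months:.1f} months")  # 13.4 months
print(f"A100 break-even: {a100_months:.1f} months")  # 10.2 months

# Bursty workloads push the threshold out proportionally.
h100_quarter_time = break_even_months(27_500, 2.85, utilization=0.25)
print(f"H100 at 25% utilization: {h100_quarter_time:.1f} months")
```

The last call illustrates the point made in the text: at a quarter-time utilization, the break-even period is four times longer than under continuous use.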

Beyond direct cost comparisons, rental models eliminate depreciation risk entirely. GPU technology advances at a relentless pace, with new architectures arriving approximately every eighteen to twenty-four months. NVIDIA’s transition from Ampere (A100) to Hopper (H100) delivered transformational performance improvements, and the upcoming Blackwell architecture promises another generational leap. Organizations that purchased A100 hardware in 2022 now face the prospect of working with technology that, while still capable, lags meaningfully behind current state-of-the-art capabilities. Rental platforms absorb this obsolescence risk, allowing users to seamlessly migrate to newer hardware as it becomes available.

The hidden operational burdens of ownership compound these challenges. High-performance GPUs consume between 400 and 700 watts under load, with an eight-GPU server drawing several kilowatts continuously. The resulting electricity costs are substantial, but the cooling requirements are even more demanding. Data center-grade HVAC systems capable of dissipating this thermal output represent both significant capital investments and ongoing operational expenses. Organizations must also maintain dedicated IT staff with specialized expertise in GPU cluster management, an expensive and increasingly scarce talent pool.
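To put the power figures in perspective, the following sketch estimates the GPU-only draw of an eight-GPU server and the resulting annual electricity bill. The per-GPU wattage range comes from the text; the electricity price of $0.12 per kWh and the cooling overhead factor of 1.5 are hypothetical placeholders, not figures from this article, and should be replaced with local values.

```python
HOURS_PER_YEAR = 24 * 365


def server_power_kw(gpu_count, watts_per_gpu):
    """GPU-only power draw in kilowatts (excludes CPUs, memory, networking)."""
    return gpu_count * watts_per_gpu / 1000


def annual_energy_cost(power_kw, price_per_kwh, overhead_factor=1.0):
    """Yearly electricity cost for a continuous load.

    overhead_factor scales the load to account for cooling and other
    facility overhead (1.0 = no overhead).
    """
    return power_kw * HOURS_PER_YEAR * overhead_factor * price_per_kwh


low_kw = server_power_kw(8, 400)
high_kw = server_power_kw(8, 700)
print(f"Eight-GPU draw: {low_kw:.1f} to {high_kw:.1f} kW")

# Hypothetical inputs: $0.12/kWh and a 1.5x cooling overhead.
ASSUMED_PRICE_PER_KWH = 0.12
ASSUMED_OVERHEAD = 1.5
cost = annual_energy_cost(high_kw, ASSUMED_PRICE_PER_KWH, ASSUMED_OVERHEAD)
print(f"Estimated annual electricity cost at peak draw: ${cost:,.0f}")
```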

The Supply-Demand Imbalance: Understanding the 2025 GPU Shortage

The GPU rental marketplace has gained urgency from persistent supply constraints that continue to define the semiconductor landscape in 2025. Despite improvements in broader chip availability since the acute shortages of 2021-2022, advanced AI GPUs remain exceptionally difficult to source through traditional purchase channels.

The shortage stems from intersecting demand and supply factors that create what industry observers characterize as a “perfect storm” for GPU scarcity. On the demand side, the AI workload management market alone is projected to expand from $45 billion in 2025 to $866 billion by 2035, reflecting a compound annual growth rate of 34.4%. This explosive growth is distributed across multiple customer segments, all competing for the same limited GPU inventory.
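The compound annual growth rate quoted above follows directly from the start value, end value, and number of years. As a quick check, here is a minimal sketch; the function name is illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# AI workload management market: $45B (2025) to $866B (2035).
rate = cagr(45, 866, 2035 - 2025)
print(f"Implied CAGR: {rate:.1%}")  # 34.4%
```

The same function can be applied to any of the market-size pairs cited in this article to confirm that a stated growth rate is consistent with its endpoints.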

Hyperscale cloud providers including Amazon, Microsoft, and Google have committed unprecedented capital to AI infrastructure expansion. Microsoft alone plans to invest $80 billion in AI data centers by 2025, while Amazon has allocated $86 billion for similar infrastructure buildouts. These tech giants are simultaneously the largest purchasers of high-end GPUs and, through their cloud platforms, the largest resellers of GPU capacity to enterprise customers.

AI-native startups building generative AI services represent a second major demand source, often willing to pay premium prices to secure hardware access that could determine competitive positioning in rapidly evolving markets. Traditional enterprises experimenting with on-premises AI deployments for data privacy, security, or latency-sensitive applications constitute a third segment. Remarkably, even small businesses and individual prosumers are now entering the market for AI-optimized hardware, further compounding strain on limited supply.

Perhaps most significantly, NVIDIA’s dominance of the AI GPU market creates concentrated dependency on a single supplier. The company’s CUDA software ecosystem and specialized tensor core architectures offer performance advantages that competitors struggle to match, and NVIDIA reportedly allocated an estimated sixty percent of its chip production to enterprise AI clients in the first quarter of 2025. Industry analysts project these supply constraints will persist through at least 2026, with some forecasting continued shortages into 2027.

Global Growth Trajectories: Regional Patterns in GPU Rental Adoption

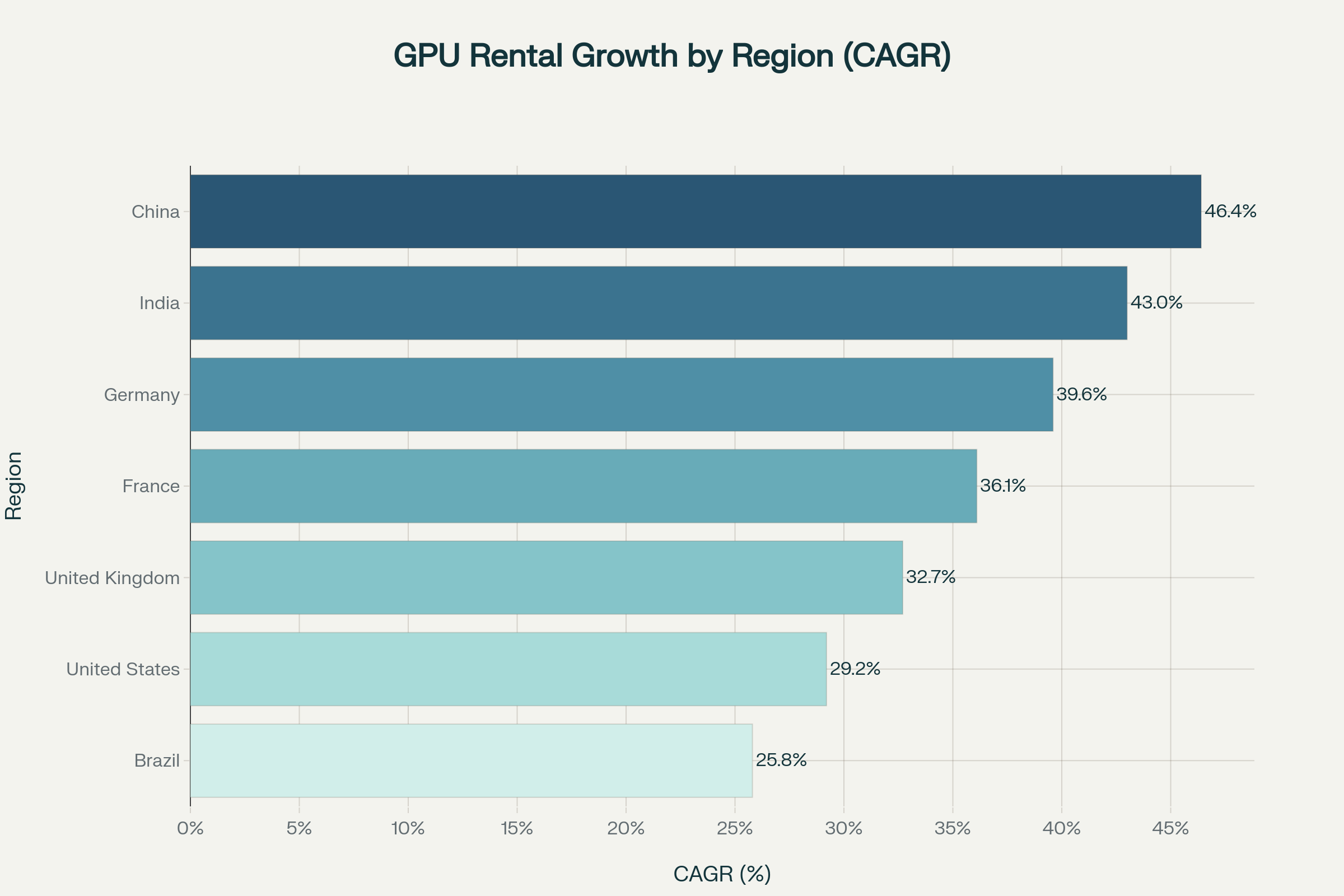

The expansion of GPU rental markets exhibits striking geographic variation, with Asia-Pacific regions demonstrating the most explosive growth rates while North America maintains market leadership in absolute terms.

China leads global growth projections with a staggering 46.4% compound annual growth rate through 2035, driven by the rapid expansion of cloud computing infrastructure and government-backed AI development initiatives. The country’s emphasis on digital transformation, smart city development, and AI-driven industrial applications creates massive computational demand that GPU rental platforms are uniquely positioned to fulfill. India follows closely with a 43% CAGR, propelled by widespread digitalization across IT, telecommunications, and financial services sectors, combined with a burgeoning startup ecosystem focused on AI applications.

Asia-Pacific markets, led by China and India, are experiencing the fastest GPU rental market growth rates globally through 2035

European markets demonstrate robust but more moderate growth, with Germany projecting a 39.6% CAGR, France at 36.1%, and the United Kingdom at 32.7%. These markets benefit from advanced industrial bases, strict data governance frameworks that favor sovereign cloud infrastructure, and substantial research institutions driving AI innovation. The United States, despite its technological sophistication and early-mover advantage in cloud computing, shows a more mature 29.2% growth rate reflecting an already-developed market with higher baseline adoption.
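Differences of a few percentage points in annual growth compound into large gaps over a decade. The sketch below converts the regional growth rates quoted above into cumulative growth multiples. The ten-year horizon is an assumption for illustration, since the text states only that the projections run through 2035, and the output is a multiple of each market's own starting size rather than a revenue forecast.

```python
# Regional CAGRs quoted in the text, expressed as fractions.
REGIONAL_CAGR = {
    "China": 0.464,
    "India": 0.43,
    "Germany": 0.396,
    "France": 0.361,
    "United Kingdom": 0.327,
    "United States": 0.292,
}

ASSUMED_YEARS = 10  # assumption: a ten-year projection window


def growth_multiple(annual_rate, years):
    """Cumulative growth factor after compounding annual_rate for `years`."""
    return (1 + annual_rate) ** years


multiples = {
    region: growth_multiple(rate, ASSUMED_YEARS)
    for region, rate in REGIONAL_CAGR.items()
}

# Print regions from fastest to slowest cumulative growth.
for region, multiple in sorted(multiples.items(), key=lambda item: -item[1]):
    print(f"{region:<15} {multiple:5.1f}x over {ASSUMED_YEARS} years")
```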

North America maintained the largest regional market share in 2024, accounting for approximately $1.3 billion of global GPU rental revenue. This dominance stems from the concentration of major technology companies, robust cloud infrastructure, and high AI adoption rates across industries. However, the faster growth trajectories in Asia-Pacific suggest a gradual rebalancing of market distribution over the coming decade.

The Marketplace Ecosystem: Platform Differentiation and Competitive Dynamics

The GPU rental landscape has evolved into a diverse ecosystem serving distinct customer segments through differentiated business models. Understanding these variations is essential for organizations seeking to optimize their infrastructure choices.

True marketplace platforms like Spheron.ai operate on a fundamentally different model from traditional cloud providers. Rather than owning and operating their own GPU infrastructure, these platforms aggregate capacity from multiple providers, creating a competitive environment in which suppliers bid for customer business. This structural approach delivers several advantages. First, competition among providers naturally drives prices downward, with marketplace platforms typically offering rates 50-80% below those of major public cloud providers for equivalent hardware. Second, the diversity of providers creates broader geographic distribution and more varied hardware configurations than any single operator could economically provide.

With no minimum commitment and a minimum allocation of a single GPU, the platform deliberately lowers barriers to entry, making high-performance computing accessible to individual developers and small teams operating on constrained budgets.

Vast.ai also follows the GPU marketplace model and maintains some of the most competitive pricing available. This affordability comes with tradeoffs: the platform’s reliance on individual, non-professional hosts can introduce variability in reliability, and the user experience requires more technical sophistication than managed services. For budget-conscious developers comfortable with hands-on infrastructure management, Vast.ai represents an exceptional value proposition.

Specialized AI cloud providers like RunPod and Lambda Labs occupy a middle tier, offering curated hardware selections with varying degrees of management services. RunPod has gained particular traction in the generative AI and creative communities through its dual offering of on-demand pods and serverless GPU functions. The platform’s pay-per-second billing, fast cold-start times, and integrated development tools including SSH and VS Code tunnels create an optimized experience for AI developers. RunPod’s A100 80GB pricing at $1.74 per hour positions it competitively against both marketplace platforms and traditional clouds.

Lambda Labs focuses exclusively on AI workloads with specialized infrastructure including high-speed NVLink and InfiniBand interconnects essential for distributed training across multi-GPU clusters. Early access to latest-generation NVIDIA hardware and pre-installed machine learning frameworks deliver value for teams prioritizing bleeding-edge performance over absolute cost minimization. However, Lambda’s minimum one-month commitments and starting prices of $2.49 per hour for H100 access make it less suitable for short-duration experiments or intermittent usage patterns.

Public cloud giants AWS, Google Cloud, and Microsoft Azure offer GPU instances integrated within their comprehensive cloud ecosystems. This integration creates value for organizations already standardized on these platforms or requiring tight coupling between GPU compute and other cloud services including managed databases, object storage, and serverless functions. However, this convenience comes at a significant price premium, with H100 instances often exceeding $8.50 per hour and A100 instances around $4.20 per hour: roughly two to three times the rates quoted above for specialized providers and about quadruple those of marketplace platforms. Complex pricing structures, opaque availability, and frequent capacity constraints further complicate these offerings.
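The size of the public cloud premium can be checked against the hourly rates quoted in this section. The sketch below compares like with like (A100 against A100, H100 against H100) and then prices a sample job; the 8-GPU, 72-hour job size is a hypothetical example, not a figure from this article.

```python
# Hourly rates (USD per GPU-hour) quoted in this section.
SPECIALIZED_RATES = {"A100": 1.74, "H100": 2.49}   # RunPod A100, Lambda Labs H100
PUBLIC_CLOUD_RATES = {"A100": 4.20, "H100": 8.50}


def premium(public_rate, other_rate):
    """How many times more expensive the public cloud rate is."""
    return public_rate / other_rate


def job_cost(hourly_rate, gpu_count, hours):
    """Total cost of running gpu_count GPUs for the given number of hours."""
    return hourly_rate * gpu_count * hours


a100_premium = premium(PUBLIC_CLOUD_RATES["A100"], SPECIALIZED_RATES["A100"])
h100_premium = premium(PUBLIC_CLOUD_RATES["H100"], SPECIALIZED_RATES["H100"])
print(f"A100 public cloud premium: {a100_premium:.1f}x")  # 2.4x
print(f"H100 public cloud premium: {h100_premium:.1f}x")  # 3.4x

# Hypothetical job: 8 H100 GPUs for 72 hours.
specialized = job_cost(SPECIALIZED_RATES["H100"], gpu_count=8, hours=72)
public = job_cost(PUBLIC_CLOUD_RATES["H100"], gpu_count=8, hours=72)
print(f"Specialized provider: ${specialized:,.2f}")
print(f"Public cloud:         ${public:,.2f}")
```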

Adoption Patterns: Who’s Renting GPUs and Why

The democratization of GPU access through rental marketplaces has enabled distinct user segments to participate in compute-intensive workloads previously beyond their reach.

Small and medium-sized businesses represent perhaps the most transformative beneficiary cohort. Recent surveys indicate that 53% of SMBs now utilize AI in some capacity, with an additional 29% planning adoption in the near term. These organizations report AI delivering the highest impact in IT operations, finance, and human resources functions. Cloud GPUs eliminate the capital barriers that would otherwise exclude SMBs from AI adoption, with pay-as-you-go models allowing experimentation and iteration without upfront hardware commitments. A Salesforce survey found that 91% of SMBs using AI reported revenue growth, demonstrating tangible business outcomes from affordable infrastructure access.

Academic researchers and university laboratories face perpetual budget constraints that make GPU rental particularly attractive. When institutional computing clusters become oversubscribed, a common occurrence as AI research proliferates across disciplines, researchers can provision cloud GPUs to maintain project timelines rather than waiting months for local resource availability. The ability to rent specialized hardware for specific experimental phases, then release it when no longer needed, dramatically improves capital efficiency for grant-funded research.

AI startups building commercial applications face intense pressure to iterate rapidly while preserving limited venture funding for product development and market entry rather than infrastructure acquisition. The flexibility to scale from a single GPU during prototyping to multi-GPU clusters for production training runs, then back down to lean inference infrastructure, matches the highly variable resource requirements of startup development cycles. Organizations that secure efficient GPU access gain meaningful competitive advantages over peers constrained by hardware limitations in fast-moving AI markets.

Freelance technical professionals including 3D artists, visual effects specialists, and independent AI developers utilize rental GPUs to handle client projects with intensive rendering or compute requirements. The ability to provision powerful hardware for project-specific durations, billing clients for computational costs as project expenses, transforms the economic viability of independent practice in these technical domains.

Looking Forward: The Trajectory of Compute Democratization

The GPU rental marketplace has matured from an experimental alternative to the default infrastructure model for organizations across the spectrum from individual developers to enterprise AI teams. Several trends will shape the evolution of this ecosystem through the remainder of the decade.

Continued supply constraints through 2026-2027 will maintain premium pricing and capacity pressures for latest-generation hardware, reinforcing the value proposition of rental platforms that can aggregate and efficiently allocate scarce resources. As manufacturing capacity gradually expands and newer architectures displace current flagship products, rental rates for mature hardware including A100 and early H100 generations should moderate further, improving accessibility.

The marketplace model pioneered by platforms like Spheron AI is likely to capture increasing market share from traditional cloud providers as price-conscious customers discover the substantial cost savings available through competitive provider ecosystems. Platform features including filtering, multi-provider reliability, reserved GPU options, startup script support, and flexible commitment terms will continue differentiating marketplace leaders from commodity capacity aggregators.

Emerging regions, particularly across Asia-Pacific, will see the most dramatic expansion in both GPU rental supply and demand as local cloud providers invest in AI infrastructure and regional enterprises accelerate digital transformation initiatives. This geographic diversification will improve latency and data sovereignty options for global applications while intensifying competitive pressure on pricing.

The compute power that once resided exclusively in the data centers of technology giants is now accessible to anyone with an internet connection and a credit card. This democratization is not merely reducing costs; it is fundamentally expanding the population of people and organizations capable of participating in the AI revolution, with implications for innovation, competition, and the distribution of technological capabilities that will reverberate for decades to come.